AWS Security, SIEM, the ELK Stack and Everything In Between

Over the past few years, centralized logging has become an integral part of implementing security on the cloud. Because it enables DevOps and IT Operations teams to understand the relationships between the different components in their stack, a comprehensive log management and analysis strategy is a crucial part of any security strategy.

Organizations using AWS services have a large number of auditing and logging tools at their disposal. Some of the services generate log data, auditing information and details on changes made to the configuration of the service. These distributed data sources can be tapped and used together to give a centralized security overview of the stack. Did anyone say SIEM?

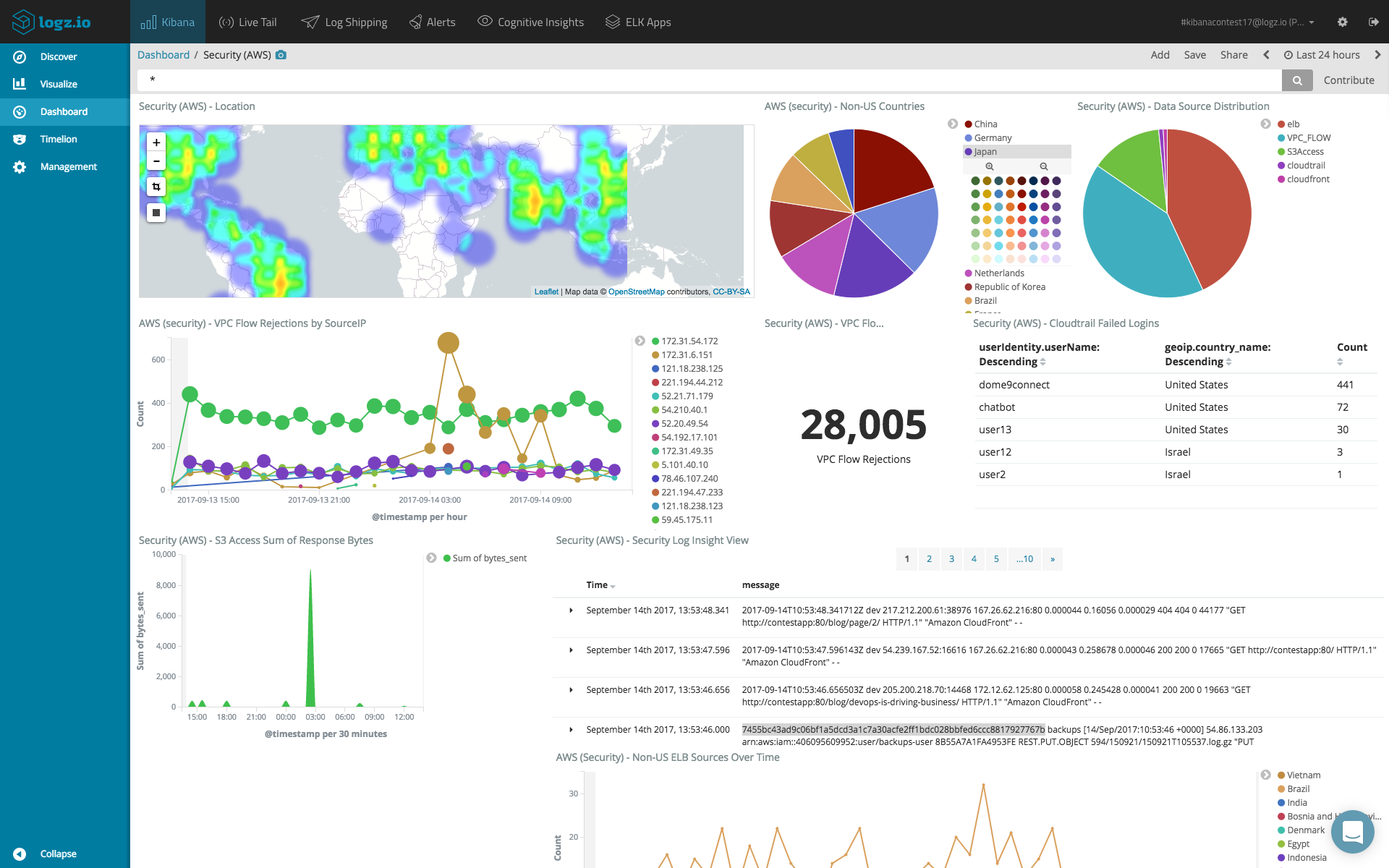

A picture is worth a thousand words.

But before you can build this kind of AWS security dashboard, there are a few bits and pieces that you need to take care of.

Where Are My Logs?

As mentioned above, many AWS services generate log data that can be aggregated and put together for event correlation. We will go into specific examples in the following section, but keep in mind that integrating with these data points requires work.

First, one needs to understand how and where to access the data itself. ELB Access logs for example need to be enabled. VPC Flow logs are shipped into a log group in CloudWatch, and so forth. In most cases, permissions need to be configured as well. So step 1 is to understand how to tap into the data provided by the AWS service.

Second, you need to figure out how to extract the data and store it. In most cases, it is stored in S3 buckets, which is great but not exactly the most accessible of services.

Third, you need tools for easily analyzing this data, not to mention visualizing it. One cannot be expected to export compressed log files from an S3 bucket, open these files and then scan the lines for crucial information.
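To make the pain of the manual approach concrete, here is a minimal sketch of scanning a gzip-compressed log file for a string. It assumes the object has already been downloaded from S3 as bytes; the function name `scan_gzipped_log` and the sample log content are hypothetical and used only for illustration.

```python
import gzip
import io


def scan_gzipped_log(data: bytes, needle: str):
    """Yield decoded lines from gzip-compressed log bytes that contain `needle`."""
    with gzip.open(io.BytesIO(data), mode="rt", encoding="utf-8", errors="replace") as fh:
        for line in fh:
            if needle in line:
                yield line.rstrip("\n")


# Stand-in for an object body downloaded from S3 (hypothetical content).
sample = gzip.compress(b"GET /index.html 200\nGET /admin 403\nGET /about 200\n")
matches = list(scan_gzipped_log(sample, " 403"))
print(matches)
```

This works for a single file, but repeating it across thousands of objects and several log formats is exactly the kind of work a centralized logging pipeline is meant to take over.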

And last but not least, all of this data needs to be secured somehow. As long as the data resides on S3 you can be pretty confident that the log data, which can be extremely sensitive, is secure but once exported, what then?

Using ELK for Centralized Logging

“After every storm the sun will smile; for every problem there is a solution” goes the famous saying.

The ELK Stack (Elasticsearch, Logstash and Kibana) is the most commonly used solution by AWS users for centrally logging their environment.

These users might be using their own ELK deployment or they might be using AWS hosted Elasticsearch services. They also might be using a different hosted ELK solution such as Logz.io. In any case, ELK is used because it helps overcome the hurdles described above: aggregating log and audit data from the different sources, processing and enhancing this data (Logstash), storing it in one central data store (Elasticsearch), and providing analysis and visualization tools (Kibana).

Before you begin to ship data into the ELK solution of your choice, it is important to understand what data is made available by AWS services that can be used for AWS security.

Log Components for AWS Security

Let’s take a closer look at some of the data sources to use for getting a comprehensive view of an AWS-based environment. Of course, one organization’s stack will not be identical to another organization’s stack. Still, most teams will be using some of the services mentioned below.

VPC Flow Logs

VPC Flow logs allow users to capture information about the IP traffic going to and from the network interfaces in an AWS VPC. These logs record allowed and denied traffic, source and destination IP addresses, ports, IANA protocol numbers, packet and byte counts, the time intervals during which flows were observed, and the resulting action (ACCEPT or REJECT).
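As an illustration, the sketch below parses one flow log record into named fields. It assumes the default space-separated record layout (version 2 fields); custom formats would need a different field list. The helper name `parse_flow_record` and the sample values are hypothetical.

```python
# Field order of the default (version 2) flow log record; an assumption
# that holds only if no custom log format was configured.
FIELDS = (
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
)
NUMERIC = ("srcport", "dstport", "protocol", "packets", "bytes", "start", "end")


def parse_flow_record(line: str) -> dict:
    """Split one default-format flow log line into a dict of named fields."""
    parts = line.split()
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}")
    record = dict(zip(FIELDS, parts))
    for name in NUMERIC:
        # Records with no data use "-" placeholders, so convert only digits.
        if record[name].isdigit():
            record[name] = int(record[name])
    return record


sample_line = (
    "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
    "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK"
)
record = parse_flow_record(sample_line)
print(record["srcaddr"], "->", record["dstaddr"], record["dstport"], record["action"])
```

In an ELK setup this kind of field extraction is usually done in Logstash rather than in application code; the Python version is only meant to show what the fields are.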

These logs can be extremely useful from a security perspective. You can use them to analyze rejection rates, identify system misuse, correlate increases in traffic with load in other parts of the system, and verify that only the intended servers within the VPC are being accessed. You can also make sure the right ports are being accessed from the right servers and receive alerts whenever certain ports are being accessed.
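A rough sketch of two of these checks, the rejection rate and accepted traffic on unexpected ports, might look like the following. It again assumes default-format flow log lines; the `ALLOWED_PORTS` policy, the function name and the sample records are made up for the example.

```python
from collections import Counter

ALLOWED_PORTS = {22, 443}  # hypothetical policy for this example


def summarize_flows(lines):
    """Return (rejection_rate, accepted flows that hit ports outside the policy)."""
    actions = Counter()
    unexpected = []
    for line in lines:
        parts = line.split()
        # Skip malformed lines and records without port data.
        if len(parts) != 14 or not parts[6].isdigit():
            continue
        srcaddr, dstport, action = parts[3], int(parts[6]), parts[12]
        actions[action] += 1
        if action == "ACCEPT" and dstport not in ALLOWED_PORTS:
            unexpected.append((srcaddr, dstport))
    total = actions["ACCEPT"] + actions["REJECT"]
    rate = actions["REJECT"] / total if total else 0.0
    return rate, unexpected


sample_lines = [
    "2 123456789010 eni-1 10.0.0.5 10.0.1.9 40100 22 6 10 840 1418530010 1418530070 ACCEPT OK",
    "2 123456789010 eni-1 198.51.100.4 10.0.1.9 40101 3389 6 1 40 1418530010 1418530070 REJECT OK",
    "2 123456789010 eni-1 10.0.0.7 10.0.1.9 40102 8080 6 5 420 1418530010 1418530070 ACCEPT OK",
    "2 123456789010 eni-1 10.0.0.5 10.0.1.9 40103 443 6 8 900 1418530010 1418530070 ACCEPT OK",
]
rejection_rate, unexpected_ports = summarize_flows(sample_lines)
print(rejection_rate, unexpected_ports)
```

In Kibana the same questions would typically be answered with a filter on the action field and a terms aggregation on the destination port.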

CloudTrail Logs

CloudTrail records activity in your AWS environment, allowing you to monitor who is doing what, when, and where. API calls made in an AWS account are logged by CloudTrail and typically delivered within minutes of the call rather than in strict real time. Each record includes the identity of the caller, the time of the call, the source IP address, the request parameters, and the response elements returned by the service.
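The "who, what, when, where" view can be sketched directly from a CloudTrail log file, which is a JSON document with a top-level `Records` array. The example below is an assumption-laden illustration: the function `summarize_event` is hypothetical and the sample event is invented, though the field names it reads are standard CloudTrail record fields.

```python
import json


def summarize_event(event: dict) -> dict:
    """Reduce one CloudTrail record to who/what/when/where."""
    identity = event.get("userIdentity", {})
    return {
        "who": identity.get("arn") or identity.get("userName") or identity.get("type", "unknown"),
        "what": f'{event.get("eventSource")}:{event.get("eventName")}',
        "when": event.get("eventTime"),
        "where": event.get("sourceIPAddress"),
        "region": event.get("awsRegion"),
        # CloudTrail adds errorCode only when the call failed.
        "failed": "errorCode" in event,
    }


# Invented sample in the shape of a CloudTrail log file.
sample_file = json.loads("""
{"Records": [{
  "eventTime": "2017-09-05T10:12:45Z",
  "eventSource": "s3.amazonaws.com",
  "eventName": "PutBucketAcl",
  "awsRegion": "us-east-1",
  "sourceIPAddress": "203.0.113.9",
  "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"},
  "requestParameters": {"bucketName": "example-bucket"},
  "responseElements": null
}]}
""")
summaries = [summarize_event(e) for e in sample_file["Records"]]
print(summaries[0])
```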

ELB Access Logs

Elastic Load Balancers (ELB) allow AWS users to distribute traffic across EC2 instances. ELB access logs are one of the options users have to monitor and troubleshoot this traffic.

ELB access logs are collections of information on all the traffic running through the load balancers. This data includes where the ELB was accessed from, which internal machines were accessed, details about the requester (such as the user agent, which usually reveals the operating system and browser), and additional metrics such as processing time and traffic volume.
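As a sketch of what these entries contain, the following parses one access log line. It assumes the Classic Load Balancer layout for HTTP listeners (space-separated, with the request and user agent in double quotes); other load balancer types use different layouts. The names `ELB_FIELDS` and `parse_elb_line` are illustrative.

```python
import shlex

# Assumed field order for a Classic Load Balancer access log entry.
ELB_FIELDS = (
    "timestamp", "elb", "client", "backend",
    "request_processing_time", "backend_processing_time", "response_processing_time",
    "elb_status_code", "backend_status_code", "received_bytes", "sent_bytes",
    "request", "user_agent", "ssl_cipher", "ssl_protocol",
)


def parse_elb_line(line: str) -> dict:
    """Parse one access log line; quoted fields are kept intact by shlex."""
    parts = shlex.split(line)
    if len(parts) < len(ELB_FIELDS):
        raise ValueError(f"expected at least {len(ELB_FIELDS)} fields, got {len(parts)}")
    entry = dict(zip(ELB_FIELDS, parts))
    entry["client_ip"] = entry["client"].rsplit(":", 1)[0]
    entry["backend_processing_time"] = float(entry["backend_processing_time"])
    entry["sent_bytes"] = int(entry["sent_bytes"])
    return entry


sample_line = (
    '2015-05-13T23:39:43.945958Z my-loadbalancer 192.168.131.39:2817 10.0.0.1:80 '
    '0.000073 0.001048 0.000057 200 200 0 29 '
    '"GET http://www.example.com:80/ HTTP/1.1" "curl/7.38.0" - -'
)
entry = parse_elb_line(sample_line)
print(entry["client_ip"], entry["backend"], entry["elb_status_code"], entry["user_agent"])
```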

ELB logs can be used for a variety of use cases: monitoring access, checking the operational health of the ELBs, and measuring their efficiency, to name a few. In the context of operational health, you might want to determine whether your traffic is being distributed equally amongst all internal servers. For operational efficiency, you might want to identify the volume of access that you are getting from different locations in the world.
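The distribution check can be sketched in a few lines. The example assumes the backend address has already been extracted from each log entry; the function names and the 20 percent tolerance are arbitrary choices made for illustration, not a recommended threshold.

```python
from collections import Counter


def backend_shares(backends):
    """Return the fraction of requests served by each backend address."""
    counts = Counter(backends)
    total = sum(counts.values())
    return {backend: n / total for backend, n in counts.items()} if total else {}


def is_unbalanced(shares, tolerance=0.2):
    """Flag a distribution where any backend deviates from an even split by more than `tolerance`."""
    if not shares:
        return False
    expected = 1 / len(shares)
    return any(abs(share - expected) > tolerance for share in shares.values())


# Hypothetical backend column taken from ten parsed log entries.
observed = ["10.0.0.1:80"] * 8 + ["10.0.0.2:80"] * 2
shares = backend_shares(observed)
print(shares, is_unbalanced(shares))
```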

S3 Access Logs

S3 access logs record events for every access of an S3 Bucket. Access data includes the identities of the entities accessing the bucket, the identities of buckets and their owners, and metrics on access time and turnaround time as well as the response codes that are returned.

From a security perspective, monitoring these logs is key. You can determine from where and how buckets are being accessed and receive alerts on unauthorized attempts to access your buckets.
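One way to surface denied requests is to pull the leading fields out of each S3 access log line and keep the entries with a 403 status. The sketch below assumes the standard server access log layout for those leading fields and ignores everything after the error code; the regular expression, the function `denied_requests` and the sample lines (with a shortened owner ID) are illustrative only.

```python
import re

# Leading fields of an S3 server access log line (assumed layout).
S3_PREFIX = re.compile(
    r'(?P<owner>\S+) (?P<bucket>\S+) \[(?P<time>[^\]]+)\] (?P<remote_ip>\S+) '
    r'(?P<requester>\S+) (?P<request_id>\S+) (?P<operation>\S+) (?P<key>\S+) '
    r'(?:"(?P<request_uri>[^"]*)"|-) (?P<status>\d{3}|-) (?P<error_code>\S+)'
)


def denied_requests(lines):
    """Return (remote_ip, requester, operation, key) for every 403 response."""
    denied = []
    for line in lines:
        match = S3_PREFIX.match(line)
        if match and match.group("status") == "403":
            denied.append(match.group("remote_ip", "requester", "operation", "key"))
    return denied


sample_lines = [
    'ownerid examplebucket [06/Feb/2019:00:00:38 +0000] 192.0.2.3 ownerid 3E57427F3EXAMPLE '
    'REST.GET.OBJECT reports/q1.csv "GET /examplebucket/reports/q1.csv HTTP/1.1" '
    '200 - 113 113 7 6 "-" "curl/7.38.0" -',
    'ownerid examplebucket [06/Feb/2019:00:01:02 +0000] 203.0.113.9 - 7B4A0FABBEXAMPLE '
    'REST.GET.OBJECT reports/q1.csv "GET /examplebucket/reports/q1.csv HTTP/1.1" '
    '403 AccessDenied 243 - 5 - "-" "curl/7.38.0" -',
]
denied = denied_requests(sample_lines)
print(denied)
```

A requester of `-` indicates an unauthenticated request, which is often the most interesting case to alert on.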

And so on…

There are several other AWS services that generate log data, such as CloudFront, Redshift, and RDS, and depending on how your technology stack is set up, you might want to extract and store these logs as well for implementing a tighter security protocol.

The Path to SIEM

SIEM (Security Information and Event Management) can be defined as an approach to security management based on providing organizations with a comprehensive real-time view of their IT security. This view allows these organizations to manage vulnerabilities, implement compliance policies, identify patterns and detect threats.

Logs are a main data source for gaining this comprehensive view, and centralized logging is a key tool for gathering, processing and analyzing the data. As detailed above, the ELK Stack is a means to this end, and in an AWS environment, can help users build a SIEM-based security view based on the various data made available by the different services.

Alerting

Implementing SIEM involves more than just log aggregation and management. An important element is being able to set off alerts and be notified when specific events occur. It’s important to understand that the ELK Stack does not include a built-in alerting mechanism. Therefore, if you are not using a hosted ELK service such as Logz.io, you will need to either hack your own solution or pay for additional components.
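To give a sense of what building your own alerting involves, here is a minimal sketch of a threshold rule: fire when the same key (for example, a source IP) is seen a given number of times within a time window. The class `ThresholdAlert` is hypothetical, it assumes events arrive in timestamp order, and a real setup would also need scheduling, state persistence and notification delivery.

```python
from collections import defaultdict, deque


class ThresholdAlert:
    """Fire when a key is observed `threshold` times within `window_seconds`."""

    def __init__(self, threshold: int, window_seconds: float):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._seen = defaultdict(deque)

    def observe(self, key: str, timestamp: float) -> bool:
        """Record one event and return True if the rule fires for this key."""
        events = self._seen[key]
        events.append(timestamp)
        # Drop events that have fallen out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) >= self.threshold


# Three denied requests from one IP within 60 seconds trigger the rule.
rule = ThresholdAlert(threshold=3, window_seconds=60)
results = [rule.observe("203.0.113.9", t) for t in (0, 20, 45, 200)]
print(results)
```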

Retention

Another important element of SIEM is retention. To gain historical perspective and to be able to effectively correlate data over time, a long-term storage mechanism is required. Again, the ELK Stack does not provide an out-of-the-box retention capability, and many organizations will turn to S3 as a solution, archiving old data and reinjecting it when needed.
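A simple retention policy can be sketched as a function that picks which daily indices are old enough to archive. This assumes daily indices named in the Logstash default style (`logstash-YYYY.MM.DD`); the function name, the seven-day window and the index list are hypothetical, and the actual snapshot to S3 and index deletion are left out.

```python
from datetime import date, datetime, timedelta


def indices_to_archive(index_names, today: date, keep_days: int, prefix: str = "logstash-"):
    """Return the daily indices dated before the retention cutoff, sorted by name."""
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in index_names:
        if not name.startswith(prefix):
            continue
        try:
            day = datetime.strptime(name[len(prefix):], "%Y.%m.%d").date()
        except ValueError:
            continue  # not a daily index, leave it alone
        if day < cutoff:
            expired.append(name)
    return sorted(expired)


# Hypothetical index list as it might be returned by the cluster.
names = ["logstash-2017.08.01", "logstash-2017.09.10", "logstash-2017.09.14", ".kibana"]
expired = indices_to_archive(names, today=date(2017, 9, 15), keep_days=7)
print(expired)
```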

Forensic Tools

Implementing a SIEM solution for an AWS-based environment results in a huge amount of data. Even in a small to medium-sized setup, we are talking about millions of log lines a day. To be able to analyze this data, SIEM architects need the right analysis tools.

Kibana allows users to query the data and build dashboards such as the one shown above. You will still need help in gaining insight from the large data sets, and a variety of tools exist for this purpose. Complementing the built-in analysis and visualization features in ELK, Logz.io has developed an artificial intelligence layer on top of ELK which can be used to identify hidden security issues within the log data.

Endnotes

At the beginning of September, thousands of files stored on AWS S3 containing sensitive information on US citizens were leaked. While there are no specific details on how these documents leaked, it seems reasonable to assume that a tighter security policy for managing S3 access could have helped prevent the leak.


This case is just one simple example of AWS vulnerabilities, but it stresses the need for tighter security in cloud environments. Centralized logging and the ELK Stack are a key component of any security strategy on AWS, whether for implementing SIEM or another security protocol.

When approaching the subject of security on AWS, ask yourself the following questions: 1) Which services in my stack generate log and audit data? 2) How do I extract and aggregate this data? 3) If centralized logging and ELK is the chosen path, do I need more than the provided capabilities?
