Exploring Elasticsearch Vulnerabilities

Whether you’re an active member of the ELK community or just a happy user, you’ve probably heard of a recent data breach involving Elasticsearch. Indeed, hardly a month goes by without an article or research report showcasing sensitive information exposed on an Elasticsearch cluster.

A substantial amount of this research into vulnerable Elasticsearch instances is conducted by Bob Diachenko, a security analyst and consultant at Security Discovery. For example, one major discovery at the beginning of the year involved millions of sensitive files, including home loan applications, credit reports, bankruptcy records, and more.

I asked Bob a few questions about his work, his findings on Elasticsearch vulnerabilities and his general outlook on the future.

tl;dr – Read the manual and don’t be lazy!

What motivated you to begin researching Elasticsearch security breaches?

It all started back in 2015, when a company I was working for experienced a serious data breach. I was in charge of cleaning up the mess after the fact and communicating an “all is safe and secure now” message to our customers. This event became a turning point in my career. I decided to take a closer look at data breaches: how they occur, which technologies are especially vulnerable and why, and how best to mitigate them.

Since then, I’ve focused on open source NoSQL databases and their weaknesses, specifically Elasticsearch, MongoDB and CouchDB, but also other smaller and less popular databases. My goal is to stay ahead of malicious actors targeting these exposed databases, to alert users when possible, and to raise awareness.

Can you explain a bit about how you conduct your research?

My methodology is pretty simple. I use a set of search engines that crawl the web for exposed IPs and ports. Similar to how Google crawls the web for websites, these tools crawl the web for connected and exposed endpoints.

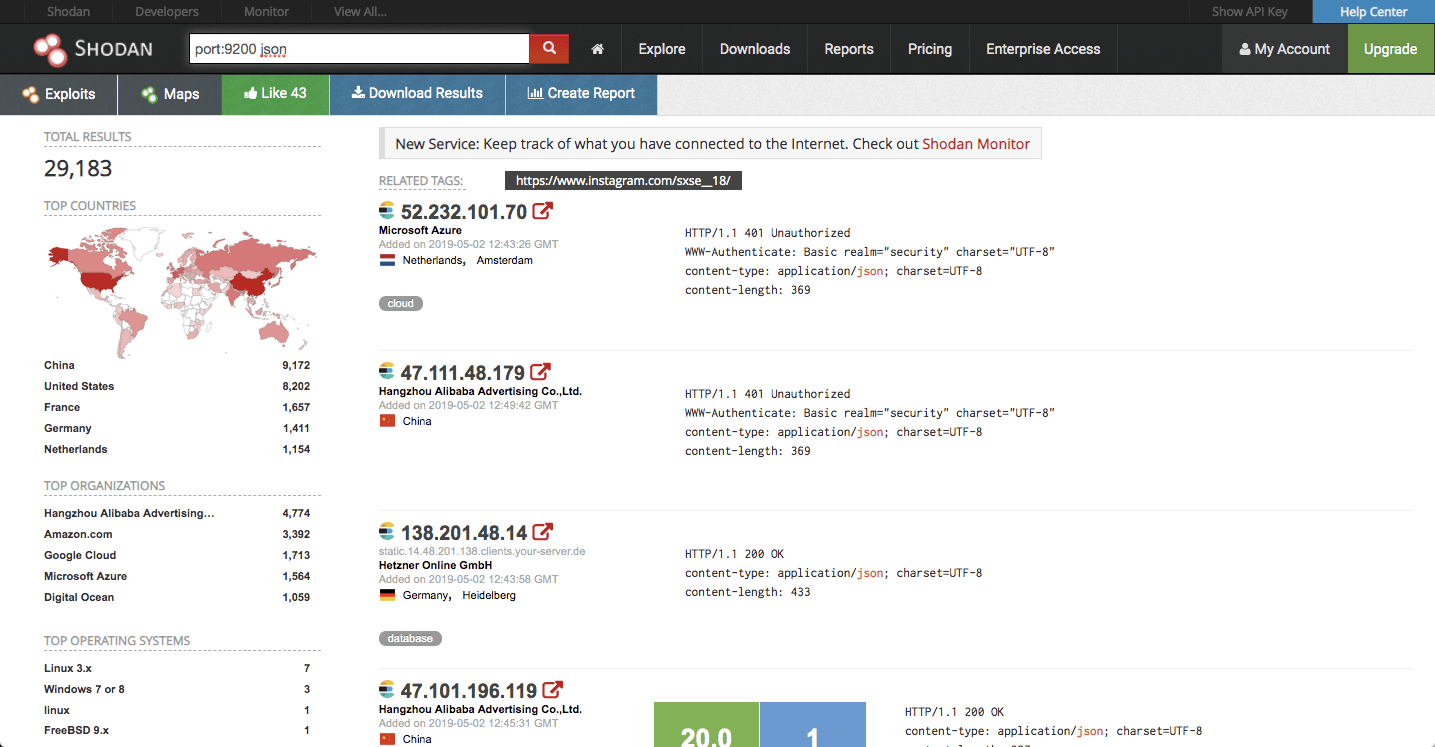

Using Shodan for example, one can simply see a list of IPs of exposed databases that can be accessed easily via a browser:

I also use tools such as BinaryEdge, Censys, ZoomEye and others.
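The same visibility is available to defenders. A minimal way to see your own cluster the way these search engines do is to send one unauthenticated request to the node’s HTTP endpoint (port 9200 by default) and check whether it answers with its cluster banner. The Python sketch below assumes you only point it at hosts you own or are authorized to test; the function names `classify_response` and `probe` are hypothetical, and treating a JSON reply that contains `cluster_name` and `version` as “open” is a heuristic based on what Elasticsearch’s root endpoint normally returns.

```python
import json
import urllib.error
import urllib.request


def classify_response(status: int, body: str) -> str:
    """Classify the reply to an unauthenticated GET on a node's root endpoint."""
    if status in (401, 403):
        # The node (or a proxy in front of it) rejected the anonymous request.
        return "auth-required"
    if status == 200:
        try:
            info = json.loads(body)
        except ValueError:
            return "unknown"
        # Heuristic: an open node answers GET / with its cluster name and version.
        if isinstance(info, dict) and "cluster_name" in info and "version" in info:
            return "open"
    return "unknown"


def probe(base_url: str, timeout: float = 5.0) -> str:
    """Send one unauthenticated GET to base_url (hosts you own only) and classify it."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return classify_response(resp.status, body)
    except urllib.error.HTTPError as err:
        # urlopen raises on 4xx/5xx responses; only the status code matters here.
        return classify_response(err.code, "")
    except OSError:
        # Connection refused, DNS failure, timeout, etc. (URLError is an OSError).
        return "unreachable"
```

Running `probe("http://your-host:9200")` from a machine outside your network gives the outside view: an `"open"` result means the node accepts anonymous requests, which is exactly the kind of exposure discussed in this interview.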

Elasticsearch has been mentioned a lot in recent reports on data breaches. Do you see this in your data?

I would estimate that out of all the data breaches I’ve identified, 60% can be traced to exposed Elasticsearch instances, 30% are MongoDB-related, and the rest are equally distributed across the other databases. So yes, Elasticsearch is by far the most vulnerable NoSQL database showing up in my research.

Are there any specific regions or deployment types where you see a relatively large number of vulnerable Elasticsearch instances?

This probably won’t surprise anyone, but Elasticsearch instances installed on either AWS or Microsoft Azure are the most exposed, with the U.S. and China leading the pack geographically. I suspect the popularity of AWS as a platform for deploying Elasticsearch and the sheer number of Azure users are driving these numbers.

So what makes Elasticsearch so vulnerable compared to the other databases coming up in your research?

It all comes down to the default security configuration provided by the open source distribution of the database. Elasticsearch, specifically, does not provide a built-in authentication and authorization mechanism, so securing it requires further investment and configuration on the user’s side. Sure, there are some basic steps that can be taken to secure Elasticsearch, but users are either not aware of them or are simply too lazy to bother.

How do you see this developing in the future?

The sad truth is that I don’t see the situation improving in the years ahead, at least not as long as human beings are the ones installing Elasticsearch and making the configurations. Humans are humans. A lot of developers look for the easiest way to get started, and that does not involve setting up proper security for Elasticsearch.

We have to end this interview with a positive message! What best practices do you recommend for organizations using Elasticsearch?

At the end of the day, it’s all in the documentation. Of course, organizations cannot force their employees to read the Elasticsearch user manuals, but they can put elaborate internal processes in place to make sure ports are not left open and safe configurations are used as standard.

If you’re limited by budget, you can use simple tactics like not using default ports, binding Elasticsearch to a secure IP, or deploying a proxy like nginx in front of Elasticsearch. If you can invest some time and resources, there are a few open source options such as SearchGuard and if you have a bit of a budget you can, of course, go for a fully managed solution like Logz.io.

Security Discovery was founded by a team of cyber security researchers and offers news, best practices, consulting services and more. Members of the Security Discovery security team have identified data breaches that were covered by news outlets such as the BBC, Forbes, Financial Times, Washington Post, Engadget, TechCrunch, NY Daily News, and many more. Security Discovery reports on the data breaches it discovers and offers unique perspectives on how they occurred and who may have been impacted.
