Using the Mutate Filter in Logstash

July 9, 2019

One of the benefits of using Logstash in your data pipelines is the ability to transform the data into the desired format according to the needs of your system and organization. There are many ways of transforming data in Logstash; one of them is the mutate filter plugin.

This Logstash filter plugin allows you to force fields into specific data types and add, copy, and update specific fields to make them compatible across the environment. Here’s a simple example of using the filter to rename an IP field to HOST_IP.

...

mutate {

rename => { "IP" => "HOST_IP" }

}

...

In this article, I’m going to explain how to set up and use the mutate filter using three examples that illustrate the types of field changes that can be executed with it.

The basics

The mutate filter plugin is bundled with Logstash by default. You can verify that with the following commands:

cd /usr/share/logstash/bin

./logstash-plugin list | grep -i mutate

The output will be:

logstash-filter-mutate

The mutate filter and its different configuration options are defined in the filter section of the Logstash configuration file. The available configuration options are described later in this article. Before diving into those, however, let’s take a brief look at the layout of the Logstash configuration file.

Generally, there are three main sections of a Logstash configuration file:

- Input – this is where the source of data to be processed is identified.

- Filter – this is where the fields of the incoming event logs can be transformed and processed.

- Output – this is where parsed data will be forwarded to.

More information about formatting the Logstash configuration file can be found in the Logstash documentation.

In the example below, we’re adding a tag (Apache Web Server) to incoming Apache access logs, on the condition that the source path contains the term “apache”. Note the mutate filter in the filter section of the Logstash configuration file:

input {

file {

path => "/var/log/apache/apache_access.log"

start_position => "beginning"

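# "/dev/null" stops Logstash from persisting its read position, so the file is re-read on every run (use "NUL" on Windows)

sincedb_path => "/dev/null"

}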

}

filter {

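# the file input stores the source path in [path] ([log][file][path] when ECS compatibility is enabled)

if [path] =~ /apache/ {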

mutate {

add_tag => [ "Apache Web Server" ] }

}}

output {

elasticsearch { hosts => ["localhost:9200"] }

}
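Once a tag has been added, later stages of the pipeline can branch on it. The following is a minimal sketch of an output section that routes the tagged events to their own index. The index name apache-logs-%{+YYYY.MM.dd} is a hypothetical choice for illustration and is not part of the configuration above.

```
output {
  # events tagged by the mutate filter go to a dedicated index
  if "Apache Web Server" in [tags] {
    elasticsearch {
      hosts => ["localhost:9200"]
      index => "apache-logs-%{+YYYY.MM.dd}"   # hypothetical index name
    }
  } else {
    # everything else goes to the default index
    elasticsearch { hosts => ["localhost:9200"] }
  }
}
```

Events without a tags field simply fall through to the else branch.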

Mutate Filter Configuration Options

There are a number of configuration options which can be used with the mutate filter, such as copy, rename, replace, join, uppercase, and lowercase.

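By default, the mutate filter applies its operations in a fixed order, regardless of the order in which they are written inside a single mutate block. You can control the order by splitting operations across separate mutate blocks, but let’s look at the default order first.

The operations are listed below in the order in which they are executed: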

Configuration Options

1. coerce – set a default value for a field that exists but is null

2. rename – rename a field in the event

3. update – update an existing field with a new value; if the field does not exist, nothing happens

4. replace – replace the value of a field with a new value, or add the field if it does not exist

5. convert – convert the field value to another data type

6. gsub – match a regular expression against a field value and replace all matches with a replacement string. This only affects strings or arrays of strings.

filter {

mutate {

gsub => [

# replace all dollar signs with euros (a bare "$" is a regex end-of-line anchor, so match it inside a character class)

"fieldname1", "[$]", "€",

# replace backslashes and forward slashes with a hyphen

"fieldname2", "[\\/]", "-"

]

}

}

Note: The pattern argument of gsub is a regular expression. To replace text only when it follows a particular string, put that string in a lookbehind, as in "(?<=prefix)".

7. uppercase – this converts a string field to its uppercase equivalent

8. capitalize – this converts a string field to its capitalized equivalent

9. lowercase – convert a string field to its lowercase equivalent

10. strip – remove the leading and trailing white spaces

11. remove – remove a field from the event (in recent plugin versions, use remove_field instead)

12. split – split a string field into an array using a separating character, like a comma

13. join – join the elements of an array into a single string using a separating character, like a comma

filter {

mutate {

split => { "fieldname1" => "," }

}

}

filter {

mutate {

join => { "fieldname2" => "," }

}

}

14. merge – merge two arrays or two hashes. Strings are automatically converted to arrays, so merging an array with a string works as a merge of two arrays. You cannot merge an array with a hash.

15. copy – copy an existing field to a second field. If the second field already exists, it is overwritten; the original field is left unchanged.
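Because the order is fixed, the position of an option inside a single mutate block does not matter. For instance, copy always runs last, so uppercasing a copied field in the same block has no effect: the copy does not exist yet when uppercase runs. A minimal sketch of the usual workaround is to use separate mutate blocks, which run in the order they are written. The field names hostname and hostname_upper are hypothetical.

```
filter {
  # block 1: create the copy first
  mutate {
    copy => { "hostname" => "hostname_upper" }
  }
  # block 2: runs after block 1, so the copied field now exists
  mutate {
    uppercase => [ "hostname_upper" ]
  }
}
```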

Other options include:

- add_field – add a new field to the event

- remove_field – remove an arbitrary field from the event

- add_tag – add an arbitrary tag to the event

- remove_tag – remove the tag from the event if present

- id – add a unique ID to the plugin configuration, which helps tell multiple mutate filters apart when monitoring Logstash

- enable_metric – enable or disable metric logging for this specific plugin instance. This is a boolean, true or false.

- periodic_flush – Also a boolean, this calls the filter flush method at regular intervals.
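As a rough illustration of how several of these options look together, here is a minimal sketch. The ID, the field names, and the values are all hypothetical. These common options are applied once the filter has run successfully on an event.

```
filter {
  mutate {
    id => "enrich_events"                         # hypothetical ID
    add_field => { "environment" => "staging" }   # hypothetical field and value
    add_tag => [ "enriched" ]                     # hypothetical tag
    remove_field => [ "temp_field" ]              # hypothetical field
  }
}
```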

Simple and Conditional Removals

Performing a Simple Removal

In this example, we want to remove the “Password” field from a small CSV file with 10-15 records. This type of removal can be very helpful when shipping log event data that includes sensitive information. Payroll management systems, online shopping systems, and mobile apps handling transactions are just a few of the applications for which this action is necessary.

The configuration below will remove the field “Password”:

input {

file {

path => "/Users/put/Downloads/Mutate_plugin.CSV"

start_position => "beginning"

sincedb_path => "/dev/null"

}

}

filter {

csv { autodetect_column_names => true }

mutate {

remove_field => [ "Password" ] }

}

output {

stdout { codec => rubydebug }

}

After the code has been added, run the Logstash config file. In this case, the final outcome will be shown in the terminal since we are printing the output on stdout.

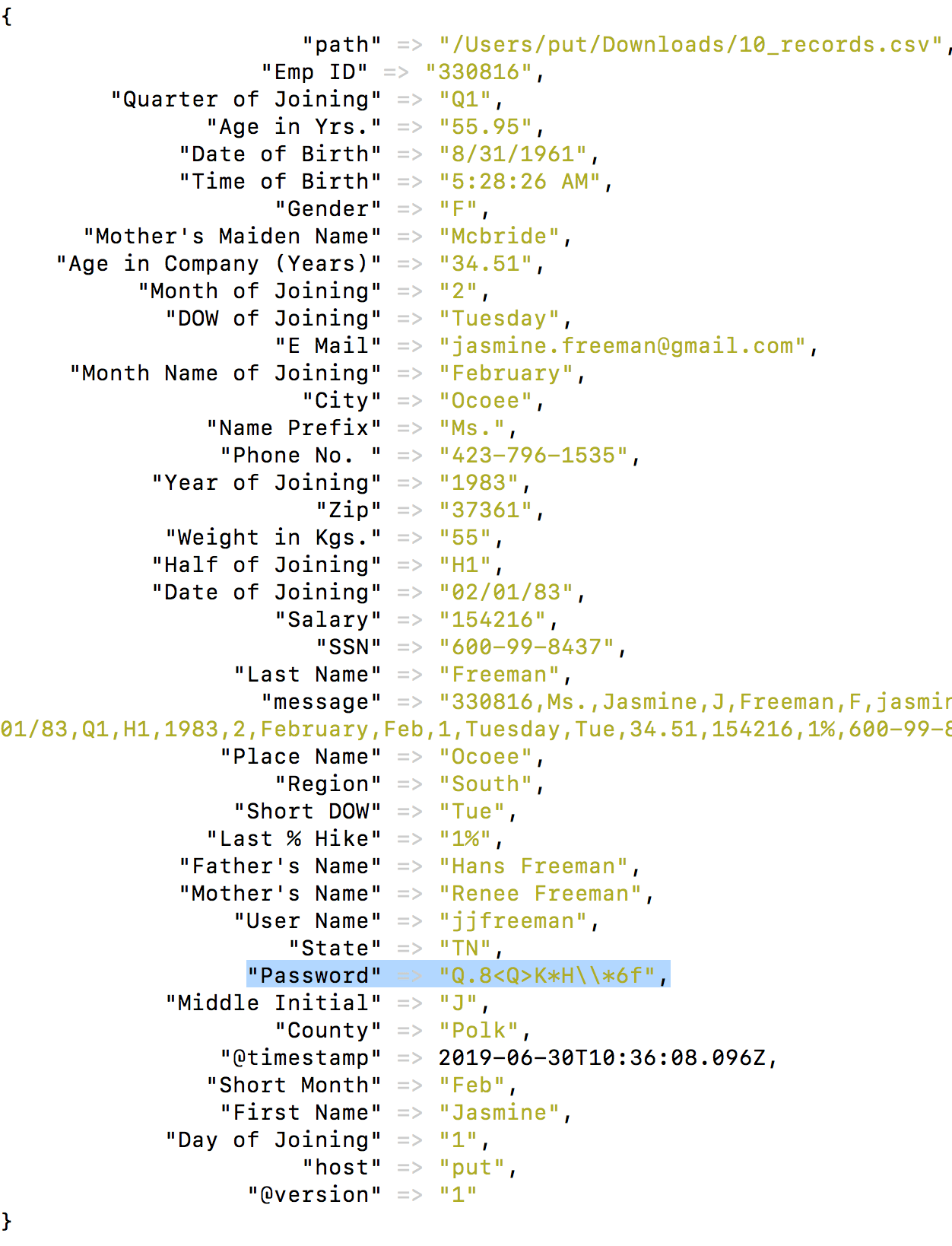

As seen below, the “Password” field has been removed from the events:

Performing a Conditional Removal

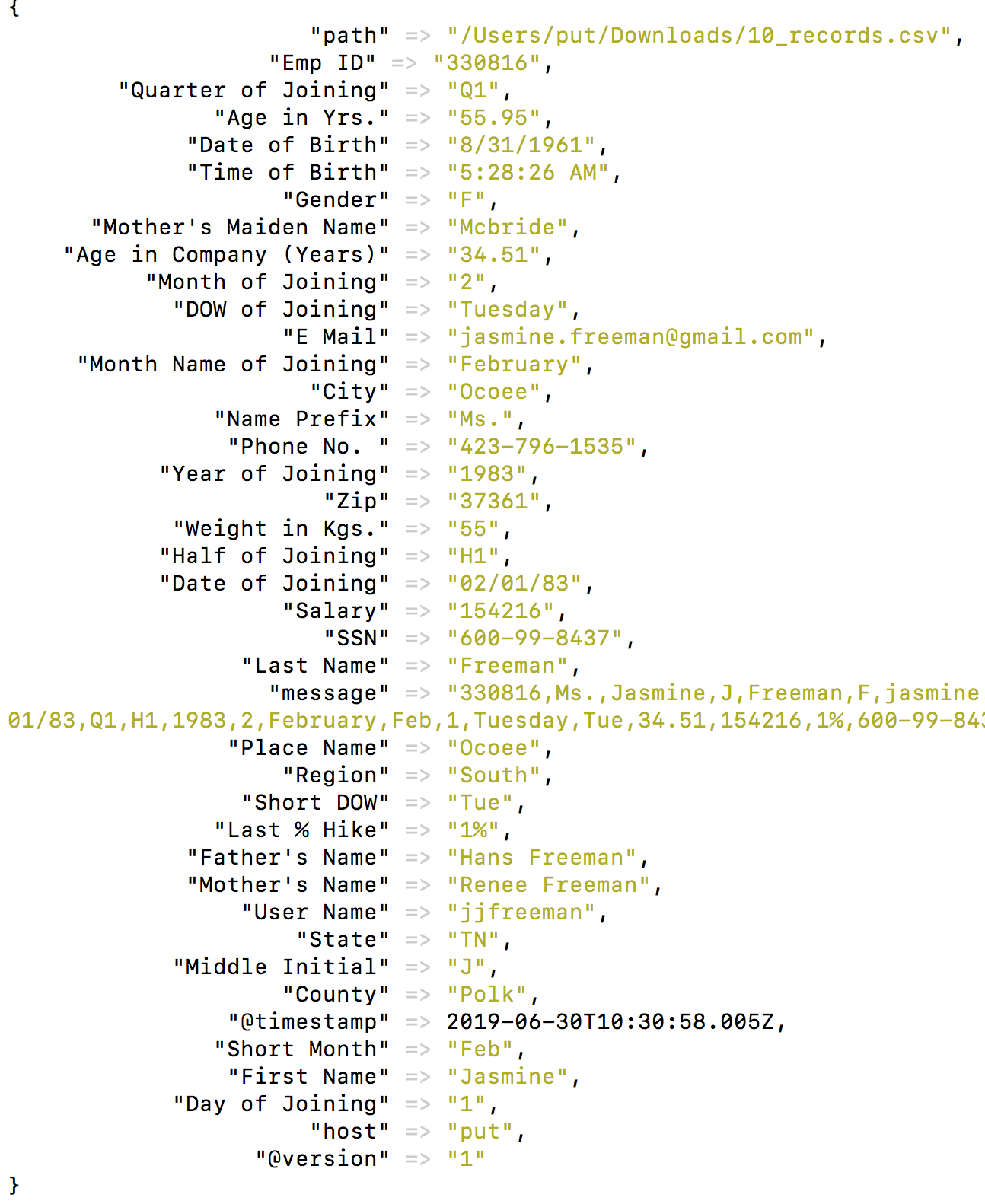

In this example, the field “Password” is again being removed from the events. This time, however, the removal is conditional on the salary: if [Salary] == "154216".
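A side note on this comparison: the csv filter produces string values by default, which is why the condition compares against the string "154216". If a numeric comparison is needed instead, one possible variation is to convert the field first. The sketch below assumes the same Salary column; the threshold 150000 is a hypothetical value chosen for illustration.

```
filter {
  csv { autodetect_column_names => true }
  # turn the string read from the CSV into an integer
  mutate {
    convert => { "Salary" => "integer" }
  }
  # guard against a missing field, then compare numerically
  if [Salary] and [Salary] > 150000 {
    mutate {
      remove_field => [ "Password" ]
    }
  }
}
```

The example that follows keeps the simpler string comparison.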

The code below will remove the field “Password” using the condition specified earlier:

input {

file {

path => "/Users/put/Downloads/Mutate_plugin.CSV"

start_position => "beginning"

sincedb_path => "/dev/null"

} }

filter {

csv { autodetect_column_names => true }

if [Salary] == "154216" {

mutate {

remove_field => [ "Password" ] } }

}

output {

stdout { codec => rubydebug }

}

Now, run Logstash with this configuration code. The result of this conditional removal is shown below:

Merging Fields

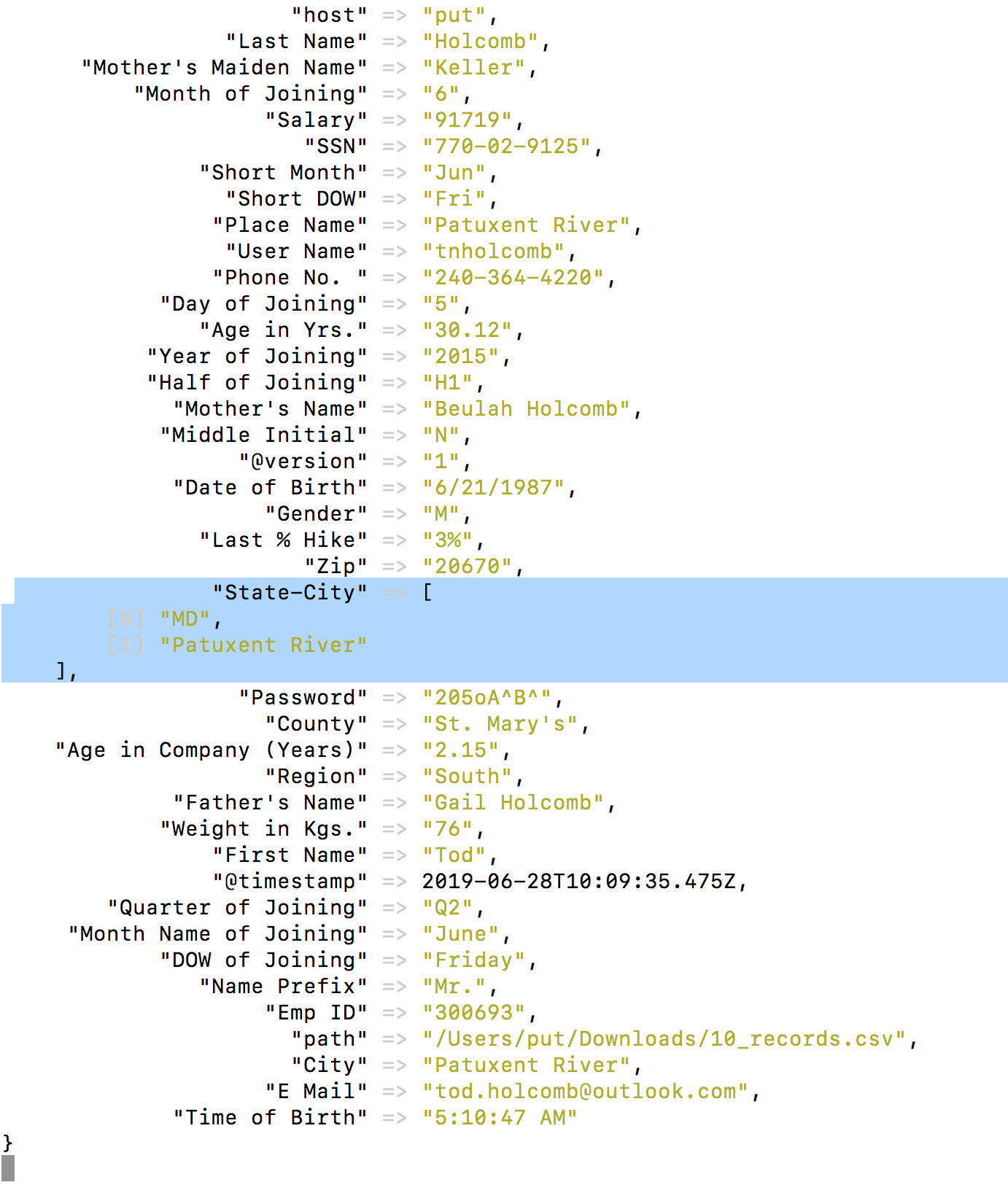

In this example, we’re going to use the mutate filter to merge two fields, “State” and “City”, using the merge option.

After merging the two, the “State” field will hold the merged data as an array. To make things clear, we will also rename the field. Because rename runs before merge inside a single mutate block, the rename is placed in a second mutate block, as shown in the code below:

input {

file {

path => "/Users/put/Downloads/Mutate_plugin.CSV"

start_position => "beginning"

sincedb_path => "/dev/null" }}

filter {

csv { autodetect_column_names => true }

mutate {

merge => { "State" => "City" } }

mutate {

rename => { "State" => "State-City" } } }

output {

stdout { codec => rubydebug }

}

Run Logstash with the configuration above to yield the merged data in the “State-City” field.

Adding White Spaces

Next, we’re going to use the mutate filter to add white spaces to the “message” field of incoming events. Currently, there are no spaces after the commas in the values of the “message” field. We will use the mutate filter’s gsub option, as shown in the code below:

input {

file {

path => "/Users/put/Downloads/Mutate_plugin.CSV"

start_position => "beginning"

sincedb_path => "/dev/null"

}

}

filter {

csv { autodetect_column_names => true }

mutate {

gsub => [ "message", "," , ", " ]

}

}

output {

stdout { codec => rubydebug }

}

Run Logstash with this configuration to see the added white spaces in the “message” field.
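One caveat with a plain "," pattern is that a comma already followed by a space ends up followed by two. Since the gsub pattern is a regular expression, a negative lookahead can skip those commas. The following is a minimal sketch, offered as a variation rather than the configuration used above:

```
filter {
  mutate {
    # add a space only after commas that are not already followed by one
    gsub => [ "message", ",(?! )", ", " ]
  }
}
```

With this pattern, a comma at the very end of the string still receives a trailing space, since nothing follows it.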

Endnotes

This article has demonstrated how the mutate filter can remove, merge, rename, and rewrite fields in a data set. There are many other important filter plugins in Logstash which can also be useful while parsing data or creating visualizations:

- JSON—used to parse the JSON events.

- KV—used to parse the key-value pairs.

- HTTP—used to integrate external APIs.

- ALTER—used to alter fields which are not handled by a mutate filter.