5 Hosted Kubernetes Platforms

July 5, 2018

As an open-source system for automating deployment, scaling, and management of containerized applications, Kubernetes has grown immensely in popularity. Increasingly, we are also beginning to come across platforms offering Kubernetes as both a hosted and managed service.

In this article, we are going to lay out some differences between hosted and self-hosted services and analyze five popular services currently available: Google’s Kubernetes Engine, Azure Kubernetes Service (AKS), Amazon’s Elastic Container Service for Kubernetes (Amazon EKS), IBM’s Cloud Container Service, and Rackspace KAAS.

Hosted vs. Self Hosted

Kubernetes is the industry-leading, open-source container orchestration framework. Created by Google and currently maintained by the Cloud Native Computing Foundation (CNCF), it took the container industry by storm thanks to Google’s experience in handling large clusters. It is now being adopted by enterprises, governments, cloud providers, and vendors thanks to its active community and feature set.

Self-hosting Kubernetes can be extremely difficult if you do not have the necessary expertise. It involves a large amount of networking and service discovery setup, plus Linux configuration across several machines. Moreover, since Kubernetes manages your entire infrastructure, you have to keep it updated to guard against attacks. Beginning with Version 1.8, Kubernetes has provided a tool called kubeadm that allows a user to run Kubernetes on a single machine in order to test it.

On the other hand, if you opt for hosted (or managed) Kubernetes, you do not need deep knowledge of or experience with infrastructure. You just need a subscription with a cloud provider, and it will deploy everything and keep it running and updated for you.

When you are ready to scale up to more machines and higher availability, you’ll find that a hosted solution is the easiest to create and maintain, and that there is a whole world of possibilities and services to choose from. In the next section, we will talk about the most popular hosted services on the market and analyze them one by one.

Hosted Services

I’ve selected five hosted services. I will first introduce them separately and then compare them across some popular Kubernetes features so you can decide for yourself.

Google’s Cloud Kubernetes Engine

Google’s Kubernetes Engine is one of the oldest hosted Kubernetes services available. Since Google is the original creator of Kubernetes, it is one of the most advanced managed offerings, with a wide array of features available.

Azure Kubernetes Service (AKS)

Azure Kubernetes Service is Microsoft’s solution for hosting Kubernetes. The service was recently made generally available, but Microsoft previously offered an older managed service called Azure Container Service. With the older service, the user could choose between Kubernetes, DC/OS, and Docker Swarm, but it did not offer the level of detail for Kubernetes that the new service does.

Amazon’s Elastic Container Service for Kubernetes (Amazon EKS)

Amazon EKS is one of the newest services available. Recently, Amazon accepted the challenge of offering its own managed Kubernetes service instead of only a proprietary orchestrator. The service is Kubernetes certified and can manage several AWS regions in a single cluster (more details in the next section).

IBM’s Cloud Container Service

IBM’s Cloud Container Service has been available since March 2017, so it is one of the oldest managed services available on the major clouds. Though not as popular as the previous three, the IBM Cloud is growing rapidly and gaining popularity among enterprise companies.

Rackspace KAAS

Rackspace Kubernetes-as-a-Service was launched in June 2018 and already has many of the best Kubernetes features, as we shall see in the next section. Rackspace is a multi-cloud consulting company and can therefore provide solutions such as portability across many other clouds.

Hosted Services Comparison

In this section, I’ve selected a few Kubernetes characteristics and compared how they are implemented on each hosted service mentioned in the previous section.

| Feature \ Service | Google Cloud Kubernetes Engine | Azure Kubernetes Service | Amazon Elastic Container Service for Kubernetes | IBM Cloud Container Service | Rackspace KAAS |
| --- | --- | --- | --- | --- | --- |
| Automatic Update | Auto or On-demand | On-demand | N/A | On-demand | N/A |
| Load Balancing and Networking | Native | Native | Native | Native | Native |
| Auto-scaling nodes | Yes | No, but with your own configuration | Yes | No | No |
| Node-groups | Yes | No | Yes | No | N/A |
| Multiple Zones and Regions | Yes | No | No | Yes | N/A |
| RBAC | Yes | Yes | Yes | Yes | Yes |
| Bare metal nodes | No | No | Yes | Yes | Yes |

Automatic Update

Automatic updates keep your cluster on the latest Kubernetes version. Google Cloud offers fully automatic updates with no manual operation required, while Azure and IBM offer on-demand version upgrades. It is not yet clear how upgrades will work on AWS and Rackspace, since both have offered the latest version (v1.10) since launch.

Load Balancing and Networking

There are two types of load balancing: internal and public. As the name suggests, an internal load balancer distributes calls between container instances inside the cluster, while a public one exposes the container instances to the world outside the cluster.

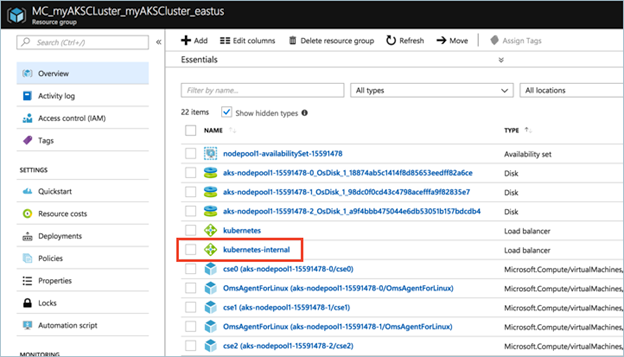

Native load balancers means that the service will be balanced using own cloud structure and not an internal, software-based, load balancer. Figure 1 shows an Azure Dashboard with a cloud-native load balancer being used by the Kubernetes solution.

Figure 1 – Native load balancer
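As a rough illustration of the internal/public distinction, the sketch below builds two Kubernetes Service manifests as plain Python dictionaries. The `type` values `ClusterIP` and `LoadBalancer` are standard Kubernetes Service types; the helper name `make_service` and the service names, labels, and ports are assumptions made up for this example.

```python
import json


def make_service(name, app_label, port, public):
    """Build a Kubernetes Service manifest as a plain dict.

    public=True  -> type LoadBalancer (the cloud provisions an external balancer)
    public=False -> type ClusterIP (reachable only from inside the cluster)
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer" if public else "ClusterIP",
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


# Hypothetical services: one internal API and one public web front end.
internal = make_service("orders-internal", "orders", 8080, public=False)
external = make_service("web-public", "web", 80, public=True)

# kubectl accepts JSON as well as YAML, so this output could be saved to a file.
print(json.dumps(external, indent=2))
```

On a hosted platform, applying the second manifest is typically what triggers the provider to create its native load balancer.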

Auto-scaling

Kubernetes can natively scale container instances to address performance bottlenecks, which is one of its major features and is part of any Kubernetes version, hosted or self-hosted. Nevertheless, when it comes to increasing or decreasing the number of nodes (VMs) running in the cluster based on resource utilization, only a few hosted services provide a solution.

Node auto-scaling provides an easy way to reduce cluster costs when your workload varies during the day. For example, if your application is mostly used during business hours, the cluster would increase the node count to provide more CPU and memory during peak hours and shrink to just one node afterwards. Without auto-scaling, you would have to change the number of nodes manually or leave it high (and pay more) to be able to handle peak hours.
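To make the cost argument concrete, here is a minimal sketch that compares node-hours with and without node auto-scaling. The load profile, node size, and node limits are invented numbers, and `nodes_needed` is a simplified stand-in, not how any provider's autoscaler actually decides.

```python
import math


def nodes_needed(load_cpus, cpus_per_node, min_nodes, max_nodes):
    """Return the node count for a given CPU demand, clamped to the pool limits."""
    wanted = math.ceil(load_cpus / cpus_per_node)
    return max(min_nodes, min(max_nodes, wanted))


# Hypothetical demand: 14 CPUs from 9:00 to 18:00, 2 CPUs the rest of the day.
hourly_load = [14 if 9 <= hour < 18 else 2 for hour in range(24)]

CPUS_PER_NODE = 4          # assumed VM size
MIN_NODES, MAX_NODES = 1, 5  # assumed pool limits

# With auto-scaling, the node count follows the load hour by hour.
autoscaled_node_hours = sum(
    nodes_needed(load, CPUS_PER_NODE, MIN_NODES, MAX_NODES) for load in hourly_load
)

# Without it, the cluster stays sized for the peak all day.
peak_nodes = nodes_needed(max(hourly_load), CPUS_PER_NODE, MIN_NODES, MAX_NODES)
fixed_node_hours = 24 * peak_nodes

print(autoscaled_node_hours, fixed_node_hours)  # 51 96
```

Under these assumed numbers, the auto-scaled cluster pays for 51 node-hours per day instead of 96.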

Google Cloud provides the easiest solution: using the GUI or the CLI, you specify the VM size and the minimum and maximum number of nodes. Everything else is managed by the provider. Amazon EKS takes second place. It uses AWS’s own autoscaler, which can be used for anything on its cloud, but it is more difficult to configure than Google Cloud’s. Azure lacks this feature, but the Cluster Autoscaler, a tool maintained by the Kubernetes project itself, gives you everything you need if you configure it yourself. The IBM and Rackspace clouds, unfortunately, do not provide this feature.

Node pools

Node pools are a useful Kubernetes feature that allows you to have different types of machines in your cluster. For example, a database instance would require faster storage, while CPU-heavy software would not need fast storage at all.
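One common way to place a workload on a particular kind of machine is the standard `nodeSelector` field of a Pod spec, which matches labels on nodes. The sketch below assumes the nodes in each pool carry a hypothetical `pool` label; real providers attach their own label keys, and the pod names and images here are placeholders.

```python
import json


def pod_for_pool(name, image, pool):
    """Build a Pod manifest that is only scheduled onto nodes labelled pool=<pool>."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # "pool" is an assumed label key used for illustration only.
            "nodeSelector": {"pool": pool},
            "containers": [{"name": name, "image": image}],
        },
    }


# The database goes to machines with fast disks, the batch job to CPU-heavy ones.
db_pod = pod_for_pool("orders-db", "postgres:10", pool="fast-storage")
batch_pod = pod_for_pool("report-job", "example/report-job:1.0", pool="high-cpu")

print(json.dumps(db_pod, indent=2))
```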

Once again, Google Cloud already has this feature and Amazon EKS is following its lead. Azure has it on its roadmap and promises to deliver it by the end of the year. Currently, there is no information from IBM or Rackspace as to when it will be available.

Multiple zones

Multiple zones allow a cluster to span more than one region around the world. This allows lower latency per request and, sometimes, reduced costs, because the cluster can be configured so that the node nearest to the user responds to a request.

Currently, only Google Cloud and IBM Cloud provide multiple regional zones.

RBAC

Role-based access control (RBAC) provides a way for admins to dynamically configure policies through the Kubernetes API. All of the evaluated hosted services provide RBAC implementations.
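As a small sketch of what such a policy looks like, the following builds a Role and a RoleBinding as Python dictionaries using the `rbac.authorization.k8s.io/v1` API group. The user name `jane`, the object names, and the helper `role_allows` are assumptions for illustration; the helper is a simplified model of rule matching, not the real Kubernetes authorizer.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"

# A Role granting read-only access to pods in the "default" namespace.
pod_reader = {
    "apiVersion": f"{RBAC_GROUP}/v1",
    "kind": "Role",
    "metadata": {"namespace": "default", "name": "pod-reader"},
    "rules": [
        {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "watch", "list"]}
    ],
}

# A RoleBinding attaching that Role to a hypothetical user.
read_pods = {
    "apiVersion": f"{RBAC_GROUP}/v1",
    "kind": "RoleBinding",
    "metadata": {"namespace": "default", "name": "read-pods"},
    "subjects": [{"kind": "User", "name": "jane", "apiGroup": RBAC_GROUP}],
    "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_GROUP},
}


def role_allows(role, verb, resource):
    """Simplified check: does any rule in the role list this verb and resource?"""
    return any(
        verb in rule["verbs"] and resource in rule["resources"]
        for rule in role["rules"]
    )


print(role_allows(pod_reader, "list", "pods"))    # True
print(role_allows(pod_reader, "delete", "pods"))  # False
print(json.dumps(read_pods, indent=2))
```

Because both objects are ordinary API resources, an admin can create or change them at any time without restarting the cluster.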

Bare metal clusters

Virtual machines are emulated computers: a virtualization layer sits between the machine and the physical hardware. Even though this layer is highly optimized and durable, it adds computing overhead. Containers are similar to VMs in terms of portability and sandboxing, but they can touch the hardware directly, which is a huge advantage.

Bare metal machines are simply physical hardware for rent. They are quite complex to deploy, and pricing differs from that of VMs due to the higher operational cost. Currently, only three cloud providers allow cluster nodes to be bare metal machines: IBM, Rackspace, and AWS.

Conclusion

Kubernetes is a shining new star in the DevOps area. As such, almost all major cloud providers are in a race to provide better and easier solutions for Kubernetes. Managing Kubernetes yourself can be difficult and costly, and any degree of automated management significantly reduces costs and improves reliability.

Using Kubernetes is also an effective way to avoid vendor lock-in: since every major cloud provider already offers its own Kubernetes service, it is easier to change providers while keeping all your scripts.

Due to Google’s leadership and involvement with Kubernetes, Google Cloud would be a good option for any new cluster: the majority of features have been available from the beginning and new updates are applied quickly. For larger deployments, try to avoid services that lack node groups: each image has different requirements that are better served by different machines. In the case of CPU-bound processes, such as batch processing or big data analysis, solutions that provide bare metal machines (e.g. IBM and Rackspace) might provide better performance.