Grok Pattern Examples for Log Parsing

March 24, 2022

Searching and visualizing logs is next to impossible without log parsing, an under-appreciated skill that anyone working with logs needs in order to make sense of their data. Parsing structures your incoming (unstructured) logs into clear fields and values that users can search against during investigations or when setting up dashboards.

The most popular log parsing language is Grok. You can use Grok plugins to parse log data in all kinds of log management and analysis tools, including the ELK Stack and Logz.io. Check out our Grok tutorial for a broader introduction.

But parsing logs with Grok can be tricky. This blog will examine some Grok pattern examples, which can help you learn how to parse your log data.

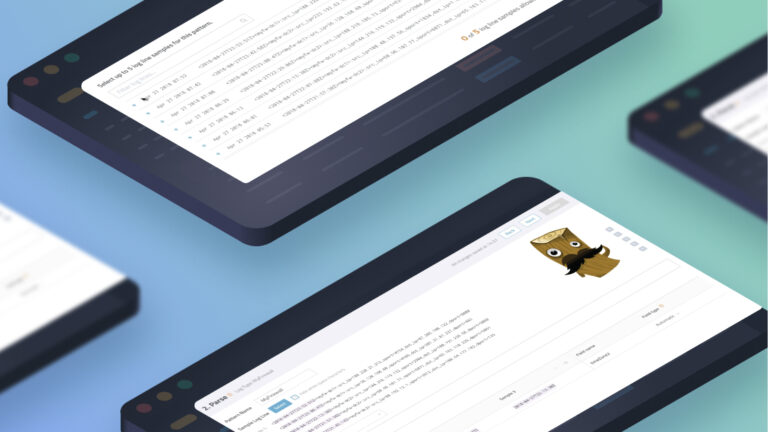

Before we get to an example, here is a quick overview of what it's like to use Logz.io for log parsing.

To learn more about how Logz.io automatically manages the entire log data pipeline at any scale (from ingestion, to parsing, to storage, to analysis), check out Logz.io's Log Management-as-a-service solution.

Let's start Grokking

Let’s start with an example unstructured log message, which we will then structure with a Grok pattern:

128.39.24.23 - - [25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" 200 61 "/category/finance" "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"

Imagine searching through millions of log lines that look like that! That's why we have parsing languages like Grok: to make the data easier to read and easier to search.

A much easier way to view that data – and to search through it with analysis tools like Kibana – is to break it down into fields with values, like the list below:

- ip:128.39.24.23

- timestamp:25/Dec/2021:12:16:50 +0000

- verb:GET

- request:/category/electronics HTTP/1.1

- status: 200

- bytes: 61

- referrer:/category/finance

- os: Windows

Let’s use an example Grok pattern to generate these fields. The following sections will show the Grok pattern syntax to generate each one of the fields above.

With each section, our Grok pattern will expand to include more fields. Grok patterns are named regular expressions that Grok combines into one larger expression, and we use them to parse our message.

Below are some helpful links for getting started with Grok patterns, and we'll provide more examples throughout the rest of the post.

Grok patterns

Grok Debugger

Let’s get started building a Grok pattern to structure the data.

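We anchor the pattern to the beginning of the line with: ^

The basic syntax is %{PATTERN:field_name}, where PATTERN is the name of a predefined Grok pattern and field_name is the name of the field that stores the matched text.

PLEASE NOTE: Avoid spaces in field names; use underscores instead (for example, field_name).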
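Under the hood, Grok expands each %{PATTERN:field_name} into a named capture group in a regular expression. The Python sketch below imitates that expansion with a tiny pattern table. PATTERNS, GROK_REF and grok_to_regex are hypothetical names for illustration, not part of any Grok library, and the definitions are simplified. In this sketch, a field name containing a space would not be recognized at all, which is one reason to stick to underscores.

```python
import re

# A tiny, hypothetical subset of Grok's pattern library (simplified definitions).
PATTERNS = {
    "WORD": r"\b\w+\b",
    "NUMBER": r"[+-]?\d+(?:\.\d+)?",
    "DATA": r".*?",
}

# Matches %{NAME} or %{NAME:field}; field names must be word characters only.
GROK_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")

def grok_to_regex(grok: str) -> str:
    """Expand %{NAME:field} into a named group and %{NAME} into a non-capturing group."""
    def expand(m):
        name, field = m.group(1), m.group(2)
        body = PATTERNS[name]
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return GROK_REF.sub(expand, grok)

regex = grok_to_regex("^%{WORD:verb} %{NUMBER:status}")
print(regex)                                # ^(?P<verb>\b\w+\b) (?P<status>[+-]?\d+(?:\.\d+)?)
print(re.match(regex, "GET 200").groupdict())  # {'verb': 'GET', 'status': '200'}
```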

1. Extracting an IP

To extract the IP, we can use the IP pattern:

^%{IP:ip}

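Next come two hyphens separated by a space (- -). In this log format, a hyphen marks a piece of information that isn't available, such as the user name.

We don't need these values, so we add them to our pattern as literal text. Grok then matches them without capturing them:

^%{IP:ip} - -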

This pattern gives us this field:

ip:128.39.24.23

One field down. Seven to go!
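To see what step 1 matches, here is a rough Python equivalent of ^%{IP:ip} - -. It assumes a simplified, IPv4-only expression. Grok's real IP pattern also covers IPv6 and is stricter about each number.

```python
import re

# Simplified IPv4 stand-in for %{IP} (an assumption: it does not check that
# each octet is 0-255 and ignores IPv6).
IPV4 = r"(?:\d{1,3}\.){3}\d{1,3}"

# Equivalent of ^%{IP:ip} - -  : the spaces and hyphens are literal text.
pattern = re.compile(rf"^(?P<ip>{IPV4}) - -")

line = '128.39.24.23 - - [25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" 200 61'
m = pattern.match(line)
print(m.groupdict())  # {'ip': '128.39.24.23'}
```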

2. Timestamps and Brackets

In the next part of our unstructured log message, the timestamp is wrapped in square brackets:

[25/Dec/2021:12:16:50 +0000]

To extract it, we use the HTTPDATE pattern and add the brackets around it so Grok matches them without capturing them. Because square brackets are special characters in regex, we escape each one with a backslash:

\[%{HTTPDATE:timestamp}\]

Building on our previous pattern, we now have:

^%{IP:ip} - - \[%{HTTPDATE:timestamp}\]

That gives us:

ip: 128.39.24.23

timestamp: 25/Dec/2021:12:16:50 +0000

Going back to our original unstructured message, there is a space between the end of the timestamp and the opening quotation mark before GET. Our pattern has to account for spaces too. We can either type a literal space in the pattern or use %{SPACE}, which matches zero or more whitespace characters.
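Here is a rough Python equivalent of \[%{HTTPDATE:timestamp}\] and %{SPACE}. The HTTPDATE expression is a simplified assumption; Grok assembles the real one from smaller patterns such as MONTHDAY, MONTH, YEAR and TIME. The strptime step is not part of Grok. It shows that the captured timestamp is still plain text until something converts it.

```python
import re
from datetime import datetime

# Simplified stand-in for %{HTTPDATE} (an assumption, not Grok's exact definition).
HTTPDATE = r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}"

# Equivalent of \[%{HTTPDATE:timestamp}\] : the brackets are escaped so they are
# matched literally instead of being read as a regex character class.
pattern = re.compile(rf"\[(?P<timestamp>{HTTPDATE})\]")

line = '128.39.24.23 - - [25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" 200 61'
raw = pattern.search(line).group("timestamp")
print(raw)  # 25/Dec/2021:12:16:50 +0000

# The capture is still text; converting it to a datetime is a separate step.
ts = datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S %z")
print(ts.isoformat())  # 2021-12-25T12:16:50+00:00

# %{SPACE} is defined as \s*, so it accepts zero, one or many whitespace characters.
SPACE = r"\s*"
for gap in ["", " ", "    "]:
    print(bool(re.fullmatch(rf'\]{SPACE}"', "]" + gap + '"')))  # True each time
```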

3. Extracting Verbs

Time to extract the verb (GET). First, we match the opening quotation mark literally so Grok skips over it; then we use the WORD pattern:

"%{WORD:verb}

So, now our pattern reads:

^%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb}

That gives us these fields:

ip:128.39.24.23

timestamp:25/Dec/2021:12:16:50 +0000

verb: GET
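As a quick illustration, here is "%{WORD:verb} written as a Python regex. Grok's WORD pattern is defined as \b\w+\b; the log fragment is taken from the example line.

```python
import re

# Grok's WORD pattern: one run of letters, digits or underscores.
WORD = r"\b\w+\b"

# Equivalent of "%{WORD:verb} : the opening quotation mark is matched literally.
pattern = re.compile(rf'"(?P<verb>{WORD})')

fragment = '[25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" 200 61'
m = pattern.search(fragment)
print(m.group("verb"))  # GET

# WORD stops at the first non-word character, so it suits the verb but
# would not capture a value like "Mozilla/5.0" in one piece.
print(re.match(WORD, "Mozilla/5.0").group())  # Mozilla
```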

4. Extracting Request

To extract the request (/category/electronics HTTP/1.1), we use the DATA pattern, which is essentially a regex wildcard: it is defined as .*?, a lazy match of any characters.

Because DATA is lazy, it matches as few characters as possible, so we need to give it a stopping point; otherwise, it captures nothing at all. We can use the closing quotation mark as the stop mark:

%{DATA:request}"

Now, we have the following grok pattern:

^%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}"

That gives us these fields:

ip:128.39.24.23

timestamp:25/Dec/2021:12:16:50 +0000

verb: GET

request:/category/electronics HTTP/1.1
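The claim that DATA needs a stopping point is easy to check in Python. Grok defines DATA as .*? (lazy) and GREEDYDATA as .* (greedy). In this sketch, \w+ stands in for the verb, and the text is the relevant slice of the example line.

```python
import re

DATA = r".*?"       # Grok's DATA: lazy, matches as little as possible
GREEDYDATA = r".*"  # Grok's GREEDYDATA: greedy, matches as much as possible

text = '"GET /category/electronics HTTP/1.1" 200 61 "/category/finance"'

# No stopping point: the lazy match is satisfied by the empty string.
no_stop = re.match(rf'"\w+ (?P<request>{DATA})', text)
print(repr(no_stop.group("request")))  # ''

# Closing quote as the stopping point: DATA expands until it reaches one.
with_stop = re.match(rf'"\w+ (?P<request>{DATA})"', text)
print(with_stop.group("request"))  # /category/electronics HTTP/1.1

# GREEDYDATA with the same stopping point runs on to the LAST quote instead.
greedy = re.match(rf'"\w+ (?P<request>{GREEDYDATA})"', text)
print(greedy.group("request"))  # /category/electronics HTTP/1.1" 200 61 "/category/finance
```

This is why the lazy DATA, rather than GREEDYDATA, is the right choice for a field that is followed by more quoted values.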

5. Extracting the Status

Next up is the status. Again, there is a space between the end of the request and the status, so we add a space or %{SPACE}. To extract numbers, we use the NUMBER pattern:

%{NUMBER:status}

Now our pattern extends to:

^%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" %{NUMBER:status}

That gives us:

ip:128.39.24.23

timestamp:25/Dec/2021:12:16:50 +0000

verb: GET

request:/category/electronics HTTP/1.1

status: 200

6. Extracting the Bytes

To extract the bytes, we use the NUMBER pattern again, preceded by a regular space or %{SPACE}:

%{NUMBER:bytes}

^%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" %{NUMBER:status} %{NUMBER:bytes}

That gives us:

ip:128.39.24.23

timestamp:25/Dec/2021:12:16:50 +0000

verb: GET

request:/category/electronics HTTP/1.1

status: 200

bytes: 61

The end is in sight.
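Here is a rough Python equivalent of " %{NUMBER:status} %{NUMBER:bytes} (steps 5 and 6). The NUMBER expression is a simplified assumption; Grok's real definition is built on BASE10NUM and accepts a few more forms. Grok captures are strings by default. Logstash's grok filter, for example, can convert them with a suffix such as %{NUMBER:bytes:int}. The sketch converts them by hand.

```python
import re

# Simplified stand-in for Grok's NUMBER pattern (an assumption).
NUMBER = r"[+-]?\d+(?:\.\d+)?"

tail = '"GET /category/electronics HTTP/1.1" 200 61 "/category/finance"'

# Equivalent of  " %{NUMBER:status} %{NUMBER:bytes}
m = re.search(rf'" (?P<status>{NUMBER}) (?P<bytes>{NUMBER})', tail)
print(m.groupdict())  # {'status': '200', 'bytes': '61'}

# Captures are plain strings; convert them before doing numeric comparisons.
status, size = int(m.group("status")), int(m.group("bytes"))
print(status >= 400)  # False
```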

7. Extracting the referrer

To extract the referrer, we add a regular space (or %{SPACE}) followed by the opening quotation mark, so Grok matches both without capturing them:

"%{DATA:referrer}"

As with the request, the closing quotation mark tells DATA where to stop. Our pattern now reads:

^%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" %{NUMBER:status} %{NUMBER:bytes} "%{DATA:referrer}"

That gives us:

ip:128.39.24.23

timestamp:25/Dec/2021:12:16:50 +0000

verb: GET

request:/category/electronics HTTP/1.1

status: 200

bytes: 61

referrer:/category/finance

8. Ignoring data and extracting OS

Now we'd like to skip over this part of the line: "Mozilla/5.0 (compatible; MSIE 9.0;

To do this, we use the DATA pattern to consume "Mozilla/5.0 along with the space and quotation mark in front of it. Because we don't give it a field name, Grok matches the text without storing it.

Then we match the opening parenthesis and use the WORD pattern, again without a colon or field name, to skip compatible;

Finally, we use DATA once more to skip MSIE 9.0;

This leaves us with the following pattern to skip that data:

%{DATA}\(%{WORD};%{DATA};

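To explain this pattern a bit more:

\( -> matches a literal (. Parentheses are special characters in regex, so we escape the opening parenthesis with a backslash.

; -> matches a literal ;, which tells the preceding WORD or DATA where to stop.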

And now, after the next ; and a space, we can use the WORD pattern to extract the OS: %{WORD:os}
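Here is a rough Python equivalent of the tail of the pattern, from the referrer to the OS. It uses Grok's definitions of WORD (\b\w+\b) and DATA (.*?). In this sketch, unnamed patterns become non-capturing groups. They consume text but produce no field, which mirrors how Grok treats a pattern without a field name.

```python
import re

WORD = r"\b\w+\b"  # Grok's WORD
DATA = r".*?"      # Grok's DATA

tail = '"/category/finance" "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"'

# Equivalent of  "%{DATA:referrer}"%{DATA}\(%{WORD};%{DATA}; %{WORD:os}
# Unnamed patterns -> (?:...) groups; named ones -> (?P<name>...) groups.
pattern = re.compile(
    rf'"(?P<referrer>{DATA})"(?:{DATA})\((?:{WORD});(?:{DATA}); (?P<os>{WORD})'
)
m = pattern.match(tail)
print(m.groupdict())  # {'referrer': '/category/finance', 'os': 'Windows'}
```

Note that WORD captures only "Windows", not "Windows NT 6.1", because it stops at the first space.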

That’s it! Now we’re left with the following grok pattern to structure our data.

^%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" %{NUMBER:status} %{NUMBER:bytes} "%{DATA:referrer}"%{DATA}\(%{WORD};%{DATA}; %{WORD:os}

And that gives us these tidy fields we can use to more easily search and visualize our log data:

ip:128.39.24.23

timestamp:25/Dec/2021:12:16:50 +0000

verb: GET

request:/category/electronics HTTP/1.1

status: 200

bytes: 61

referrer:/category/finance

os:Windows

This is what our pattern looks like in Grok.
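To check the complete pattern outside a Grok tool, here is a Python sketch that expands it into a regular expression and runs it against the example log line. The pattern definitions are simplified, hypothetical stand-ins: IP is IPv4 only and HTTPDATE is flattened. grok_to_regex is an illustrative helper, not a real library function.

```python
import re

# Simplified, hypothetical stand-ins for the Grok patterns used in this post.
PATTERNS = {
    "IP": r"(?:\d{1,3}\.){3}\d{1,3}",
    "HTTPDATE": r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}",
    "WORD": r"\b\w+\b",
    "NUMBER": r"[+-]?\d+(?:\.\d+)?",
    "DATA": r".*?",
}
GROK_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")

def grok_to_regex(grok):
    """Named references become named groups; unnamed ones become non-capturing groups."""
    def expand(m):
        body = PATTERNS[m.group(1)]
        return f"(?P<{m.group(2)}>{body})" if m.group(2) else f"(?:{body})"
    return GROK_REF.sub(expand, grok)

GROK = (r'^%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" '
        r'%{NUMBER:status} %{NUMBER:bytes} "%{DATA:referrer}"%{DATA}\(%{WORD};%{DATA}; %{WORD:os}')

LINE = ('128.39.24.23 - - [25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" '
        '200 61 "/category/finance" "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"')

fields = re.match(grok_to_regex(GROK), LINE).groupdict()
for name, value in fields.items():
    print(f"{name}: {value}")
```

Running it prints the same eight fields listed above, in pattern order: ip, timestamp, verb, request, status, bytes, referrer and os.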

How do we use the pattern we just created?

Now that we have a Grok pattern, we can implement it in a variety of grok processors. These can be found in plugins with log shippers like Fluentd, or with services like Logz.io’s Sawmill.

Logz.io is a centralized logging and observability platform that uses a service called Sawmill to parse the incoming data. We can use Logz.io’s self-service parser to implement this pattern in Sawmill, which will parse the log data coming into your Logz.io account.

Find the self-service parser here. And learn more about the service here.

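Here is our final Grok pattern, wrapped in the JSON configuration used by Logz.io's self-service parser. The pattern string itself can be used in any tool that parses logs with Grok, once the JSON escaping (\\ for each backslash and \" for each quotation mark) is removed.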

```json
{
  "steps": [
    {
      "grok": {
        "config": {
          "field": "message",
          "patterns": [
            "^%{IP:ip} - - \\[%{HTTPDATE:timestamp}\\] \"%{WORD:verb} %{DATA:request}\" %{NUMBER:status} %{NUMBER:bytes} \"%{DATA:referrer}\"%{DATA}\\(%{WORD};%{DATA}; %{WORD:os}"
          ]
        }
      }
    }
  ]
}
```

And here is what it looks like to implement our Grok pattern in Logz.io's self-service parser.
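The pattern inside the JSON configuration above is escaped for JSON: each regex backslash is written as \\ and each literal quotation mark as \". One way to see the string a Grok engine actually receives is to decode the JSON, as in this Python sketch. config_text is a hypothetical variable holding the configuration.

```python
import json

# The pipeline configuration as text; the raw string keeps the JSON escapes intact.
config_text = r'''{"steps": [{"grok": {"config": {"field": "message", "patterns": [
"^%{IP:ip} - - \\[%{HTTPDATE:timestamp}\\] \"%{WORD:verb} %{DATA:request}\" %{NUMBER:status} %{NUMBER:bytes} \"%{DATA:referrer}\"%{DATA}\\(%{WORD};%{DATA}; %{WORD:os}"
]}}}]}'''

config = json.loads(config_text)
pattern = config["steps"][0]["grok"]["config"]["patterns"][0]

# Decoded: single backslashes (\[, \], \() and plain quotation marks.
print(pattern)
```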

Now, all of our incoming logs will be structured into fields, making them easy to search and visualize. For example, if we are using Logz.io as a centralized logging tool, we could pull up requests that failed with a 400 or 500 status code by simply searching "status:400 OR status:500" in the search bar.

Logz.io also offers parsing-as-a-service. Within your Logz.io account, simply reach out to a Customer Support Engineer through the chat in the bottom right corner, and ask them to parse your logs. They’d be happy to help.