AWS S3 Bucket

These instructions cover collecting logs and metrics from an S3 bucket.

Logs

Some AWS services can be configured to ship their logs to an S3 bucket, where Logz.io can fetch those logs directly.

Which shipping method is right for you

- If your data is organized in alphabetical order, use the S3 fetcher. Logz.io runs this fetcher on its side and reads the data directly from your S3 bucket.

- If your data is not organized in alphabetical order, use the S3 hook. This approach requires deploying a Lambda function in your environment to manage the process.
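To help decide, you can check whether your object keys already sort in the order they are written. The Python sketch below is a hypothetical helper, not part of any Logz.io tool: it assumes you pass your keys in the order your service created them (oldest first) and reports whether each key sorts at or after the previous one, which is the property the S3 fetcher relies on.

```python
from typing import Iterable


def keys_are_alphabetical(keys_in_write_order: Iterable[str]) -> bool:
    """Return True if every key sorts at or after the key written before it.

    Hypothetical helper: `keys_in_write_order` is assumed to be your object
    keys in the order your service created them (oldest first).
    """
    previous = None
    for key in keys_in_write_order:
        # Python compares str by code point, which matches the UTF-8 binary
        # order S3 uses when it lists keys.
        if previous is not None and key < previous:
            return False
        previous = key
    return True


print(keys_are_alphabetical(["logs/1700000000-a.log", "logs/1700000060-b.log"]))
print(keys_are_alphabetical(["logs/web-2.log", "logs/api-3.log"]))
```

If this returns False for a realistic sample of your keys, the S3 hook is likely the better fit.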

Shipping logs via S3 Fetcher

If your S3 bucket is encrypted, add kms:Decrypt to the policy, with the ARN of the KMS key used to encrypt the bucket as its resource.

Best practices

The S3 API does not allow retrieval of object timestamps, so Logz.io must collect logs in alphabetical order. Please keep these notes in mind when configuring logging.

Make the prefix as specific as possible. The prefix is the part of your log path that remains constant across all logs. It can include the folder structure and the beginning of the filename.

The log path after the prefix must come in alphabetical order. We recommend starting the object name (after the prefix) with the Unix epoch time. The Unix epoch time always increases, which ensures Logz.io can always fetch your incoming logs.

The size of each log file should not exceed 50 MB. To ensure successful ingestion, keep each log file at 50 MB or smaller.
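If your service lets you control object names, a minimal Python sketch of epoch-first naming might look like this. The function names, the `-` separator, and the byte value used for 50 MB are illustrative assumptions, not Logz.io requirements. Zero-padding matters: as plain strings, `999999999` would sort after `1000000000`.

```python
import time
from typing import Optional

# Assumption: "50 MB" is treated as 50 * 1024 * 1024 bytes; use 50_000_000
# if you prefer the decimal definition.
MAX_LOG_FILE_BYTES = 50 * 1024 * 1024


def make_object_key(prefix: str, name: str, epoch_seconds: Optional[int] = None) -> str:
    """Build an object key that starts with the Unix epoch time after the prefix.

    The epoch is zero-padded to 10 digits so that string order matches numeric
    order (10 digits covers every timestamp up to the year 2286).
    """
    if epoch_seconds is None:
        epoch_seconds = int(time.time())
    if not 0 <= epoch_seconds < 10**10:
        raise ValueError("epoch_seconds must fit in 10 digits")
    return f"{prefix}{epoch_seconds:010d}-{name}"


def fits_size_limit(size_bytes: int) -> bool:
    """Return True if a file of this size respects the 50 MB guideline."""
    return size_bytes <= MAX_LOG_FILE_BYTES


print(make_object_key("app-logs/web/", "web.log", 1700000000))
```

Here `app-logs/web/` is a hypothetical prefix; everything after it begins with the timestamp, so new objects sort after older ones.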

Configure Logz.io to fetch logs from an S3 bucket

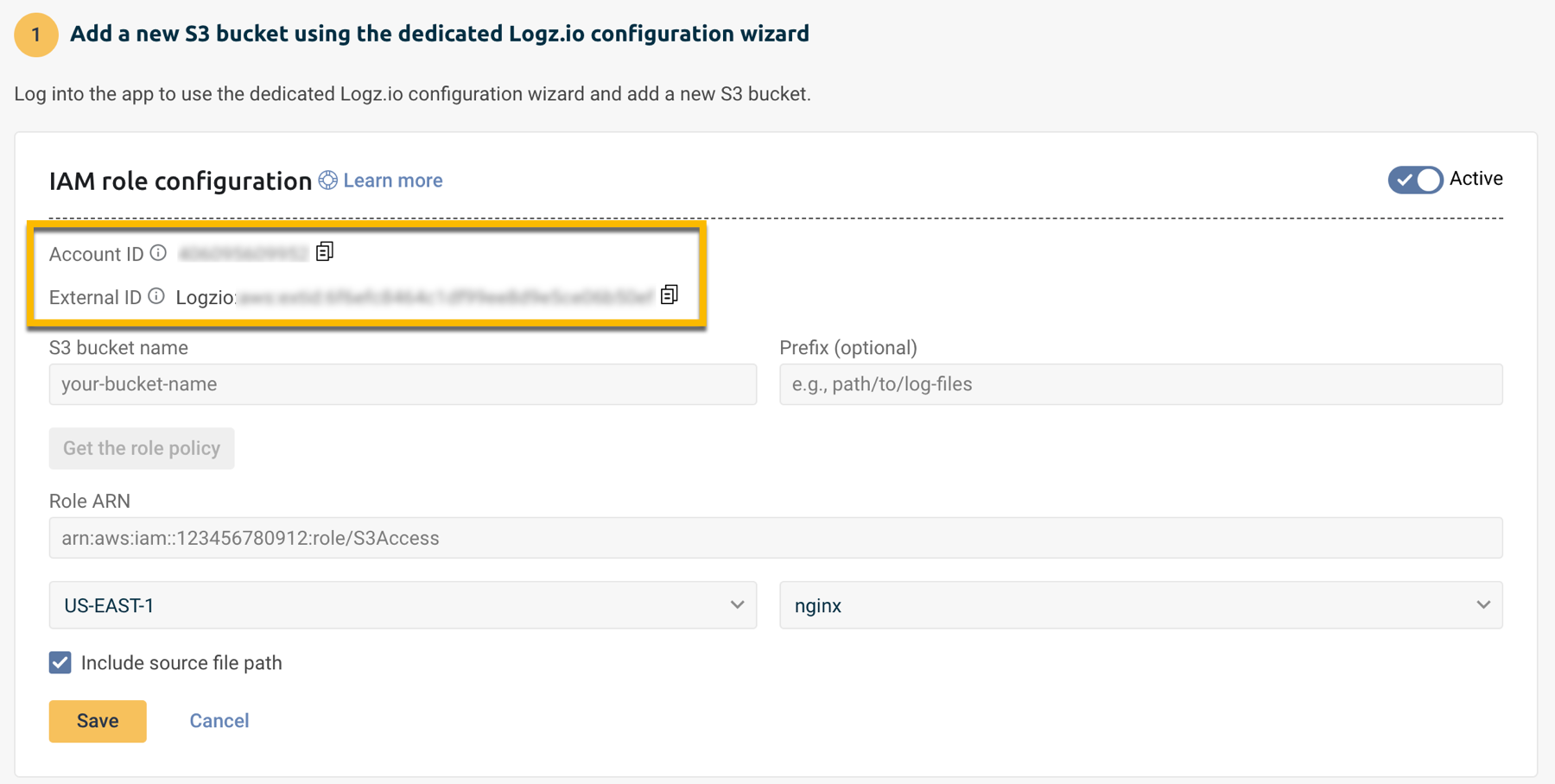

Add a new S3 bucket using the dedicated Logz.io configuration wizard

Log in to the app to use the dedicated Logz.io configuration wizard and add a new S3 bucket.

- Click + Add a bucket.

- Select IAM role as your method of authentication.

The configuration wizard will open.

- Select the hosting region from the dropdown list.

- Provide the S3 bucket name.

- (Optional) Add a prefix.

- Choose whether you want to include the source file path. This saves the path of the file as a field in your log.

- Save your information.

Logz.io fetches logs that are generated after configuring an S3 bucket. Logz.io cannot fetch old logs retroactively.

Enable Logz.io to access your S3 bucket

Logz.io needs the following permissions on your S3 bucket:

- s3:ListBucket - to see which files are in your bucket and keep track of which files have already been ingested

- s3:GetObject - to download your files and ingest them into your account

To do this, add the following to your IAM policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:ListBucket",
        "s3:GetBucketLocation",
        "kms:Decrypt"
      ],
      "Resource": [
        "arn:aws:s3:::<BUCKET_NAME>",
        "arn:aws:s3:::<BUCKET_NAME>/*",
        "<<ARN_OF_THE_KMS_KEY_ROLE>>"
      ]
    }
  ]
}

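- Replace <BUCKET_NAME> with the name of your S3 bucket.

- Replace <<ARN_OF_THE_KMS_KEY_ROLE>> with the ARN of the KMS key used to encrypt the bucket. If your bucket is not encrypted, remove kms:Decrypt and this ARN from the policy.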

Note that the ListBucket permission is set to the entire bucket and the GetObject permission ends with a /* suffix, so we can get files in subdirectories.
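If you manage IAM policies as code, a small Python sketch like the following can generate the same policy for a given bucket and add kms:Decrypt only when a KMS key ARN is supplied. The function name and the example bucket name are hypothetical; the S3 ARN formats are the standard AWS ones.

```python
import json
from typing import Optional


def build_fetcher_policy(bucket_name: str, kms_key_arn: Optional[str] = None) -> dict:
    """Build the S3 fetcher policy for the IAM role method.

    kms:Decrypt and the KMS key ARN are included only when `kms_key_arn` is
    given, i.e. when the bucket is encrypted with a KMS key.
    """
    actions = ["s3:GetObject", "s3:ListBucket", "s3:GetBucketLocation"]
    resources = [
        f"arn:aws:s3:::{bucket_name}",    # bucket itself (ListBucket, GetBucketLocation)
        f"arn:aws:s3:::{bucket_name}/*",  # every object, including subdirectories (GetObject)
    ]
    if kms_key_arn:
        actions.append("kms:Decrypt")
        resources.append(kms_key_arn)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "VisualEditor0",
                "Effect": "Allow",
                "Action": actions,
                "Resource": resources,
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_fetcher_policy("my-log-bucket"), indent=2))
```

Paste the printed JSON into the IAM policy editor, or pass the dictionary to your infrastructure tooling.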

Create a Logz.io-AWS connector

In your Logz.io app, go to Integration hub and select the relevant AWS resource.

Inside the integration, click + Add a bucket and select the option to Authenticate with a role.

Copy the Account ID and External ID and paste them into your text editor.

Fill in the form to create a new connector.

Enter the S3 bucket name and, if needed, the Prefix where your logs are stored.

Click Get the role policy. You can review the role policy to confirm the permissions that will be needed. Paste the policy into your text editor.

Keep this information available so you can use it in AWS.

Choose whether you want to include the source file path. This saves the path of the file as a field in your log.

Create the policy

Navigate to IAM policies and click Create policy.

In the JSON tab, replace the default JSON with the policy you copied from Logz.io.

Click Next to continue.

Give the policy a Name and optional Description, and then click Create policy.

Note the policy's name. You'll need it when you attach the policy to the role.

Return to the Create role page.

Create the IAM Role in AWS

Go to your IAM roles page in your AWS admin console.

Click Create role to open the Create role wizard.

Click AWS Account > Another AWS account.

Paste the Account ID you copied from Logz.io.

Select Require external ID, and then paste the External ID you've copied and saved in your text editor.

Click Next: Permissions to continue.

Attach the policy to the role

Refresh the page, and then type your new policy's name in the search box.

Find your policy in the filtered list and select its check box.

Click Next to review the new role.

Finalize the role

Give the role a Name and optional Description. We recommend beginning the name with "logzio-" so that it's clear you're using this role with Logz.io.

Click Create role when you're done.

Copy the ARN to Logz.io

In the IAM roles screen, type your new role's name in the search box.

Find your role in the filtered list and click it to go to its summary page.

Copy the role ARN (top of the page). In Logz.io, paste the ARN in the Role ARN field, and then click Save.
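The console steps above can also be scripted. The following Python sketch assumes you use boto3 and mirrors that flow: it creates the policy you copied from Logz.io, creates a role that trusts the Logz.io account ID with an external ID condition, and attaches the policy. The trust policy is the standard cross-account form AWS uses when you choose Another AWS account with Require external ID; the function and parameter names are hypothetical.

```python
import json
from typing import Any


def create_logzio_role(iam: Any, role_name: str, policy_name: str,
                       policy_document: dict, logzio_account_id: str,
                       external_id: str) -> str:
    """Create the policy and role for the S3 fetcher and return the role ARN.

    `iam` is expected to be a boto3 IAM client, e.g. boto3.client("iam").
    """
    # Create the policy from the JSON copied from Logz.io.
    policy_arn = iam.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy_document),
    )["Policy"]["Arn"]

    # Trust policy: allow the Logz.io account to assume this role, but only
    # when it presents the expected external ID.
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{logzio_account_id}:root"},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }
    role_arn = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(trust_policy),
    )["Role"]["Arn"]

    # Attach the policy to the role.
    iam.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    return role_arn
```

Call it with `boto3.client("iam")` and the Account ID, External ID, and policy you saved from Logz.io, then paste the returned ARN into the Role ARN field. Keeping the "logzio-" naming convention for `role_name` is still recommended.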

Check Logz.io for your logs

Give your logs some time to get from your system to ours, and then open OpenSearch Dashboards.

If you still don't see your logs, see log shipping troubleshooting.

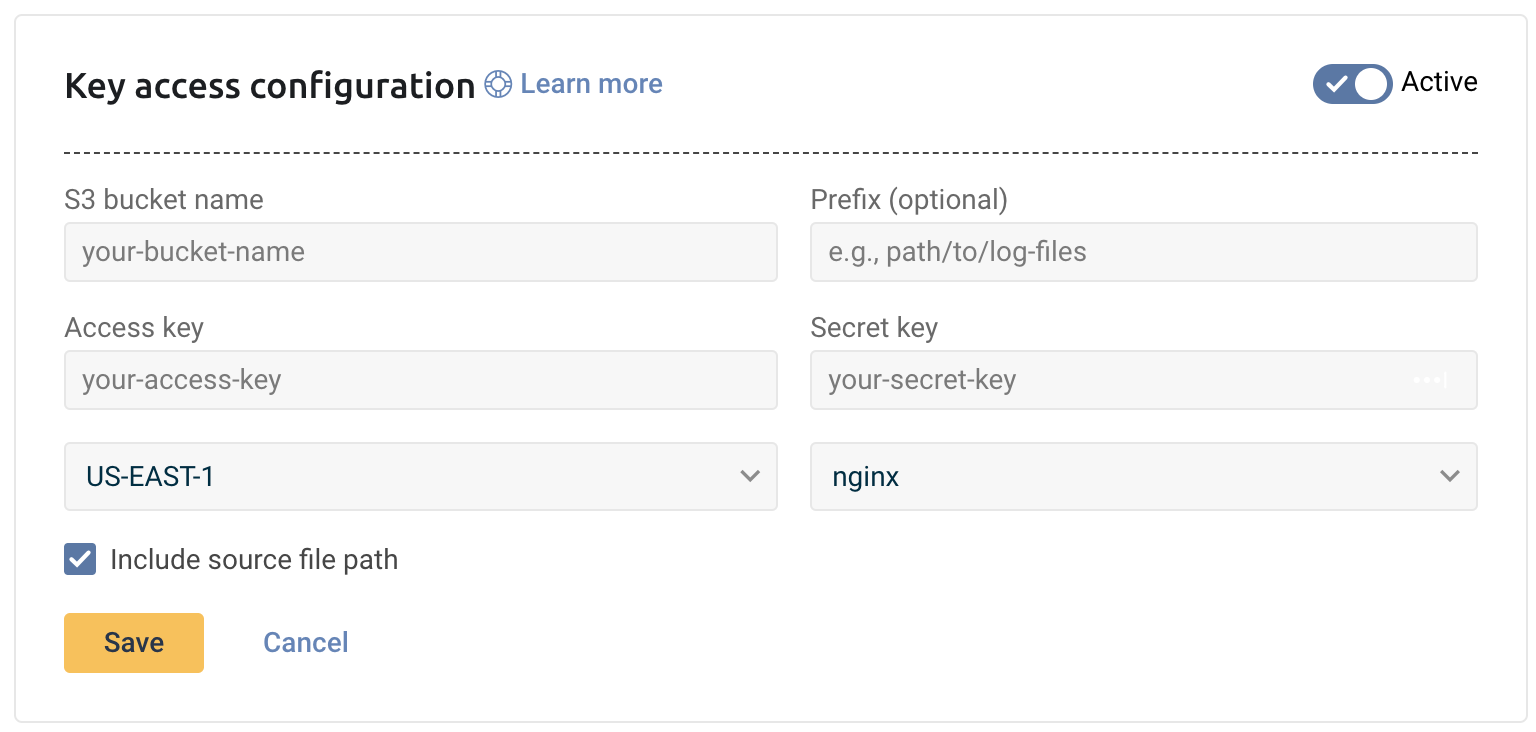

Add buckets directly from Logz.io

You can add your buckets directly from Logz.io by providing your S3 credentials and configuration.

Configure Logz.io to fetch logs from an S3 bucket

Add a new S3 bucket using the dedicated Logz.io configuration wizard

Log in to the app to use the dedicated Logz.io configuration wizard and add a new S3 bucket.

- Click + Add a bucket.

- Select access keys as the method of authentication.

The configuration wizard will open.

- Select the hosting region from the dropdown list.

- Provide the S3 bucket name.

- (Optional) Add a prefix.

- Choose whether you want to include the source file path. This saves the path of the file as a field in your log.

- Save your information.

Logz.io fetches logs that are generated after configuring an S3 bucket. Logz.io cannot fetch old logs retroactively.

Enable Logz.io to access your S3 bucket

Logz.io needs the following permissions on your S3 bucket:

- s3:ListBucket - to see which files are in your bucket and keep track of which files have already been ingested

- s3:GetObject - to download your files and ingest them into your account

To do this, add the following to your IAM policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::<BUCKET_NAME>"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": [
        "arn:aws:s3:::<BUCKET_NAME>/*"
      ]
    }
  ]
}

- Replace <BUCKET_NAME> with the name of your S3 bucket.

Note that the ListBucket permission is set to the entire bucket and the GetObject permission ends with a /* suffix, so we can get files in subdirectories.

Create the user

Go to the IAM users page and click Create user.

Assign a User name.

Under Select AWS access type, select Programmatic access.

Click Next: Permissions to continue.

Create the policy

In the Set permissions section, click Attach existing policies directly > Create policy. The Create policy page loads in a new tab.

Set these permissions:

- Service: Choose S3

- Actions: Select List > ListBucket and Read > GetObject

- Resources > bucket: Click Add ARN to open the Add ARN dialog. Type the intended Bucket name, and then click Add.

- Resources > object: Click Add ARN to open the Add ARN(s) dialog. Add the intended Bucket name, then select Object name > Any. Click Add.

Click Review policy to continue.

Give the policy a Name and optional Description, and then click Create policy.

Note the policy's name. You'll need it when you attach the policy to the user.

Close the tab to return to the Add user page.

Attach the policy to the user

Refresh the page, and then type your new policy's name in the search box.

Find your policy in the filtered list and select its check box.

Click Next: Tags, and then click Next: Review to continue to the Review screen.

Finalize the user

Give the user a Name and optional Description, and then click Create user.

You're taken to a success page.

Add the bucket to Logz.io

In Logz.io, enter the S3 bucket name and Prefix.

Copy the Access key ID and Secret access key, or click Download .csv.

In Logz.io, paste the Access key and Secret key, and then click Save.
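As with the role method, these console steps can be scripted. This Python sketch assumes a boto3 IAM client (`boto3.client("iam")`). It creates the user, creates a policy equivalent to the one shown earlier in this section, attaches it, and creates an access key. The function and argument names are hypothetical. Through the API, a new user has no console password, and access keys are created explicitly, which corresponds to the Programmatic access option in the console.

```python
import json
from typing import Any, Tuple


def create_logzio_user(iam: Any, user_name: str, policy_name: str,
                       bucket_name: str) -> Tuple[str, str]:
    """Create an IAM user limited to one bucket; return (access key ID, secret key).

    `iam` is expected to be a boto3 IAM client, e.g. boto3.client("iam").
    """
    policy_document = {
        "Version": "2012-10-17",
        "Statement": [
            # ListBucket applies to the bucket itself.
            {"Effect": "Allow", "Action": ["s3:ListBucket"],
             "Resource": [f"arn:aws:s3:::{bucket_name}"]},
            # GetObject applies to every object, including subdirectories.
            {"Effect": "Allow", "Action": ["s3:GetObject"],
             "Resource": [f"arn:aws:s3:::{bucket_name}/*"]},
        ],
    }
    iam.create_user(UserName=user_name)
    policy_arn = iam.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy_document),
    )["Policy"]["Arn"]
    iam.attach_user_policy(UserName=user_name, PolicyArn=policy_arn)
    key = iam.create_access_key(UserName=user_name)["AccessKey"]
    # Treat the secret like a password: paste it into Logz.io, don't log it.
    return key["AccessKeyId"], key["SecretAccessKey"]
```

The returned pair corresponds to the Access key ID and Secret access key you paste into Logz.io.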

Check Logz.io for your logs

Give your logs some time to get from your system to ours, and then open OpenSearch Dashboards.

If you still don't see your logs, see log shipping troubleshooting.

Troubleshooting

Migrating IAM roles to a new external ID

If you previously set up an IAM role with your own external ID, we recommend updating your Logz.io and AWS configurations to use a Logz.io-generated external ID. This adds security to your AWS account by removing the predictability of any internal naming conventions your company might have.

Before you migrate, you'll need to know where the existing IAM role is used in Logz.io. This is because you'll need to replace any S3 fetcher and Archive & restore configurations that use the existing role.

- If the role is used in a single Logz.io account: You can update the external ID and replace current Logz.io configurations. See Migrate to the Logz.io external ID in the same role (below).

- If the role is used with multiple Logz.io accounts: You'll need to create a new role for each account and replace current Logz.io configurations. See Migrate to new IAM roles (below).

Migrate to the Logz.io external ID in the same role

In this procedure, you'll:

- Replace Logz.io configurations to use the new external ID

- Update the external ID in your IAM role's trust policy

Follow this process only if the IAM role is used in a single Logz.io account.

When you update your IAM role to the Logz.io external ID, all Logz.io configurations that rely on that role will stop working. Before you begin, make sure you know everywhere your existing IAM role is used in Logz.io.

Delete an S3 configuration from Logz.io

Choose an S3 fetcher or Archive & restore configuration to replace.

Copy the S3 bucket name and Role ARN to your text editor, and make a note of the Bucket region. If this is an S3 fetcher, copy the path Prefix as well, and make a note of the Log type.

Delete the configuration.

Replace the configuration

If this is for an S3 fetcher, click Add a bucket, and click Authenticate with a role.

Recreate your configuration with the values you copied in a previous step, and copy the External ID (you'll paste it in AWS in the next step).

Replace the external ID in your IAM role

Browse to the IAM roles page. Open the role used by the configuration you deleted in step 1.

Open the Trust relationships tab and click Edit trust relationship to open the policy document JSON.

Find the line with the key sts:ExternalId, and replace the value with the Logz.io external ID you copied in step 2.

For example, if your account's external ID is logzio:aws:extid:example0nktixxe8q, you would see this:

"sts:ExternalId": "logzio:aws:extid:example0nktixxe8q"

Saving the trust policy at this point will immediately change your role's external ID. Any other Logz.io configurations that use this role will stop working until you update them.

Click Update Trust Policy to use the Logz.io external ID for this role.
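If you prefer to edit the trust policy text outside the console before pasting it back into the trust relationship editor, the following Python sketch performs the same replacement. It is a hypothetical helper that works only on the policy JSON and doesn't call AWS.

```python
import json


def replace_external_id(trust_policy_json: str, new_external_id: str) -> str:
    """Return the trust policy with every sts:ExternalId condition value replaced."""
    policy = json.loads(trust_policy_json)
    statements = policy["Statement"]
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    replaced = 0
    for statement in statements:
        # Condition maps operators (e.g. StringEquals) to key/value blocks.
        for operator_block in statement.get("Condition", {}).values():
            if isinstance(operator_block, dict) and "sts:ExternalId" in operator_block:
                operator_block["sts:ExternalId"] = new_external_id
                replaced += 1
    if replaced == 0:
        raise ValueError("no sts:ExternalId condition found")
    return json.dumps(policy, indent=2)
```

To apply the result programmatically instead of through the console, boto3's IAM client provides `update_assume_role_policy(RoleName=..., PolicyDocument=...)`. Like saving in the console, this changes the role's external ID immediately.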

Save the new S3 configuration in Logz.io

Save the configuration in Logz.io:

- For an S3 fetcher: Click Save

- For Archive & restore: Click Start archiving

You'll see a success message if Logz.io authenticated and connected to your S3 bucket.

If the connection failed, double-check your credentials in Logz.io and AWS.

(If needed) Replace other configurations that use this role

If there are other S3 fetcher or Archive & restore configurations in this account that use the same role, replace those configurations with the updated external ID.

Logz.io generates one external ID per account, so you won't need to change the role again.

Migrate to new IAM roles

In this procedure, you'll:

- Create a new IAM role with the new external ID

- Replace Logz.io configurations to use the new role

You'll repeat this procedure for each Logz.io account where you need to fetch or archive logs in an S3 bucket.

Delete an S3 configuration from Logz.io

Choose an S3 fetcher or Archive & restore configuration to replace.

Copy the S3 bucket name to your text editor, and make a note of the Bucket region. If this is an S3 fetcher, copy the path Prefix as well, and make a note of the Log type.

Delete the configuration.

Replace the configuration

If this is for an S3 fetcher, click Add a bucket, and click Authenticate with a role.

Recreate your configuration with the values you copied in step 1, and copy the External ID (you'll paste it in AWS later).

Set up your new IAM role

Using the information you copied in steps 1 and 2, follow the role setup steps under Shipping logs via S3 Fetcher (near the top of this page), from Create the policy through Copy the ARN to Logz.io.

Continue with this procedure when you're done.

(If needed) Replace other configurations that use this role

If there are other S3 fetcher or Archive & restore configurations in this account that use the same role, repeat steps 1 and 2, and use the role ARN from step 3.

For configurations in other Logz.io accounts, repeat this procedure from the beginning.

Testing IAM Configuration

After setting up s3:ListBucket and s3:GetObject permissions, you can test the configuration as follows.

Install s3cmd

For Linux and Mac:

Download the .zip file from the master branch of the s3cmd GitHub repository.

For Windows:

Download s3cmd express.

Note that s3cmd will usually prefer your locally configured S3 credentials over the ones you provide as parameters. So either back up your current S3 access settings, or use a new instance or Docker container.

Configure s3cmd

Run s3cmd --configure and enter the access key and secret key of the IAM user you created for Logz.io.

List a required bucket

Run s3cmd ls s3://<BUCKET_NAME>/<BUCKET_PREFIX>/. Replace <BUCKET_NAME> with the name of your S3 bucket and <BUCKET_PREFIX> with the bucket prefix, if you use one.

Get a file from the bucket

Run s3cmd get s3://<BUCKET_NAME>/<BUCKET_PREFIX>/<OBJECT_NAME>. Replace <BUCKET_NAME> with the name of your S3 bucket, <BUCKET_PREFIX> with the bucket prefix, and <OBJECT_NAME> with the name of the file you want to retrieve.
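To repeat these two checks for several buckets, you could wrap them in a small Python script. This sketch is a hypothetical helper that builds the same s3cmd commands and runs them with subprocess. It uses s3cmd's `-c` option, which selects a config file other than the default ~/.s3cfg, as one way to keep the Logz.io test credentials separate from your own; the caveat above about locally configured credentials still applies.

```python
import shutil
import subprocess
from typing import List, Optional


def s3_uri(bucket: str, prefix: str = "", object_name: str = "") -> str:
    """Join bucket, optional prefix, and optional object name into an s3:// URI."""
    parts = [bucket.strip("/")]
    if prefix.strip("/"):
        parts.append(prefix.strip("/"))
    uri = "s3://" + "/".join(parts) + "/"
    return uri + object_name if object_name else uri


def s3cmd_args(action: str, uri: str, config_path: Optional[str] = None) -> List[str]:
    """Build an s3cmd argument list; -c points s3cmd at a dedicated config file."""
    args = ["s3cmd"]
    if config_path:
        args += ["-c", config_path]
    return args + [action, uri]


def run_checks(bucket: str, prefix: str, object_name: str,
               config_path: Optional[str] = None) -> None:
    """Run the ListBucket test, then the GetObject test; raise if either fails."""
    if shutil.which("s3cmd") is None:
        raise RuntimeError("s3cmd is not installed or not on PATH")
    subprocess.run(s3cmd_args("ls", s3_uri(bucket, prefix), config_path), check=True)
    subprocess.run(s3cmd_args("get", s3_uri(bucket, prefix, object_name), config_path),
                   check=True)
```

For example, `run_checks("my-log-bucket", "app-logs", "1700000000-web.log", "/tmp/logzio.s3cfg")` uses hypothetical names; a non-zero exit from s3cmd raises `CalledProcessError`, which points to a missing permission.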

Shipping logs via S3 Hook

Create new stack

To deploy this project, click the button that matches the region you wish to deploy your Stack to:

Specify stack details

Specify the stack details as per the table below, check the checkboxes and select Create stack.

| Parameter | Description | Required/Default |
|---|---|---|
| logzioListener | The Logz.io listener URL for your region. (For more details, see the regions page.) | Required |
| logzioToken | Your Logz.io log shipping token. | Required |
| logLevel | Log level for the Lambda function. Can be one of: debug, info, warn, error, fatal, panic. | Default: info |
| logType | The log type you'll use with this Lambda. This is shown in your logs under the type field in OpenSearch Dashboards. Logz.io applies parsing based on the log type. | Default: s3_hook |
| pathsRegexes | Comma-separated list of regexes that match the paths you'd like to pull logs from. | - |
| pathToFields | Fields from the path to your logs directory that you want to add to the logs. For example, org-id/aws-type/account-id will add each of the fields org-id, aws-type and account-id to the logs that are fetched from the directory that this path refers to. | - |
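To illustrate how these two parameters relate to object keys, here is a Python sketch under stated assumptions: that each pathsRegexes entry is tested with a regular-expression search against the full object key, that an empty value means no filtering, and that pathToFields names are matched position by position against the key's directory segments. It illustrates the parameter descriptions only; it is not the Lambda function's source code.

```python
import re
from typing import Dict


def matches_paths(key: str, paths_regexes: str) -> bool:
    """True if the key matches any regex in a comma-separated pathsRegexes value."""
    patterns = [p.strip() for p in paths_regexes.split(",") if p.strip()]
    if not patterns:
        return True  # assumption: an empty value means no filtering
    return any(re.search(p, key) for p in patterns)


def fields_from_path(key: str, path_to_fields: str) -> Dict[str, str]:
    """Map each name in pathToFields to the path segment at the same position."""
    names = path_to_fields.strip("/").split("/")
    segments = key.split("/")[:-1]  # directory segments only, without the file name
    return dict(zip(names, segments))


print(fields_from_path("acme/elb/123456789012/1700000000.log",
                       "org-id/aws-type/account-id"))
```

With the hypothetical key above, the sketch yields org-id `acme`, aws-type `elb`, and account-id `123456789012`, matching the example in the table.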

Add trigger

Give the stack a few minutes to be deployed.

Once your Lambda function is ready, you'll need to add a trigger manually. This is due to CloudFormation limitations.

Go to the function's page, and click Add trigger.

Then, choose S3 as a trigger, and fill in:

- Bucket: Your bucket name.

- Event type: Choose All object create events.

- Prefix and Suffix: Leave these empty.

Confirm the checkbox, and click Add.
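For reference, the trigger above corresponds to an S3 bucket notification configuration. This Python sketch builds that structure for a given function ARN; the helper name and the example ARN are hypothetical, while `LambdaFunctionConfigurations` and the `s3:ObjectCreated:*` event type are the standard S3 notification fields.

```python
import json


def s3_trigger_config(lambda_function_arn: str) -> dict:
    """Notification configuration equivalent to the console trigger.

    No prefix or suffix filter is set, matching the instructions.
    """
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_function_arn,
                "Events": ["s3:ObjectCreated:*"],  # "All object create events"
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(s3_trigger_config(
        "arn:aws:lambda:us-east-1:123456789012:function:my-s3-hook"), indent=2))
```

With boto3, this dictionary is the `NotificationConfiguration` argument of `put_bucket_notification_configuration(Bucket=..., NotificationConfiguration=...)`. Unlike the console, that call doesn't grant S3 permission to invoke the function (grant it first, for example with the Lambda `add_permission` API), and it replaces the bucket's existing notification configuration.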

Send logs

That's it. Your function is configured. Once you upload new files to your bucket, it will trigger the function, and the logs will be sent to your Logz.io account.

Parsing

S3 Hook will automatically parse logs in the following cases:

- The object's path contains the phrase cloudtrail (case insensitive).
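To predict whether S3 Hook will auto-parse a given object, you can apply the rule above to its key. This one-line Python check is a hypothetical helper that restates the documented condition; it isn't taken from the S3 Hook code. CloudTrail's default delivery path (AWSLogs/<account-id>/CloudTrail/...) satisfies it.

```python
def will_parse_as_cloudtrail(object_key: str) -> bool:
    """Restate the documented rule: the path contains "cloudtrail", in any case."""
    return "cloudtrail" in object_key.lower()


print(will_parse_as_cloudtrail("AWSLogs/123456789012/CloudTrail/us-east-1/2024/01/01/log.json.gz"))
```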

Check Logz.io for your logs

Give your logs some time to get from your system to ours, and then open OpenSearch Dashboards.

If you still don't see your logs, see Log shipping troubleshooting.

Metrics

Deploy this integration to send your Amazon S3 metrics to Logz.io.

This integration creates a Kinesis Data Firehose delivery stream that links to your Amazon S3 metrics stream and then sends the metrics to your Logz.io account. It also creates a Lambda function that adds AWS namespaces to the metric stream, and a Lambda function that collects and ships the resources' tags.

Log in to your Logz.io account and navigate to the current instructions page inside the Logz.io app. Install the pre-built dashboard to enhance the observability of your metrics.

To view the metrics on the main dashboard, log in to your Logz.io Metrics account, and open the Logz.io Metrics tab.

Before you begin, you'll need:

- An active account with Logz.io

Configure AWS to forward metrics to Logz.io

Create Stack in the relevant region

To deploy this project, click the button that matches the region you wish to deploy your Stack to:

Specify stack details

Specify the stack details as per the table below, check the checkboxes and select Create stack.

| Parameter | Description | Required/Default |
|---|---|---|
| logzioListener | The Logz.io listener URL for your region. (For more details, see the regions page.) For example: https://listener.logz.io:8053 | Required |
| logzioToken | Your Logz.io metrics shipping token. | Required |
| awsNamespaces | Comma-separated list of the AWS namespaces you want to monitor. See this list of namespaces. To automatically add all namespaces, use the value all-namespaces. | At least one of awsNamespaces or customNamespace is required |
| customNamespace | A custom namespace for CloudWatch metrics. Use it to specify a namespace unique to your setup, separate from the standard AWS namespaces. | At least one of awsNamespaces or customNamespace is required |
| logzioDestination | Your Logz.io destination URL. | Required |
| httpEndpointDestinationIntervalInSeconds | The length of time, in seconds, that Kinesis Data Firehose buffers incoming data before delivering it to the destination. | Default: 60 |
| httpEndpointDestinationSizeInMBs | The size of the buffer, in MBs, that Kinesis Data Firehose uses for incoming data before delivering it to the destination. | Default: 5 |
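Before creating the stack, you can sanity-check your parameter values against the rules in the table. This Python sketch is a hypothetical pre-flight check, not part of the CloudFormation template; it encodes only the Required entries and the at-least-one-namespace rule. AWS/S3, the CloudWatch namespace for Amazon S3 metrics, is used as an example value.

```python
from typing import Dict, List


def validate_metrics_params(params: Dict[str, str]) -> List[str]:
    """Return a list of problems found in the stack parameters (empty if none)."""
    problems = []
    # Parameters marked Required in the table.
    for name in ("logzioListener", "logzioToken", "logzioDestination"):
        if not params.get(name, "").strip():
            problems.append(f"{name} is required")
    # At least one of awsNamespaces (comma-separated) or customNamespace.
    namespaces = [n.strip() for n in params.get("awsNamespaces", "").split(",") if n.strip()]
    if not namespaces and not params.get("customNamespace", "").strip():
        problems.append("set awsNamespaces or customNamespace")
    return problems


example = {
    "logzioListener": "https://listener.logz.io:8053",
    "logzioToken": "<METRICS_TOKEN>",
    "logzioDestination": "<DESTINATION_URL>",
    "awsNamespaces": "AWS/S3",
}
print(validate_metrics_params(example))
```

The placeholder values in `example` are hypothetical; replace them with your own token and destination URL.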

Check Logz.io for your metrics

Give your data some time to get from your system to ours, then log in to your Logz.io Metrics account, and open the Logz.io Metrics tab.
